How AI Can Make Credit Scoring Fairer: A Guide for Lenders

Learn how to use alternative data to build fairer, more profitable credit models and stay ahead of regulations.

Since the late 1960s, landmark laws such as the Fair Housing Act (1968) and the Equal Credit Opportunity Act (1974) have aimed to prevent discriminatory lending. Yet even with these laws in place, bias can persist.

Today, AI offers a new path. In this post, we’ll walk through four practical tips on how lenders can use AI to build a more inclusive credit system.

After that, you’ll find a step-by-step blueprint with clear instructions to help risk teams put these practices into action.

Tip #1. Build fairness on stronger borrower data

Before we can talk about how data can drive fairness, we need to look at the limits of the traditional data lenders still rely on.

Why legacy credit models leave borrowers behind

Traditional credit models often rely on a narrow set of data, such as FICO scores and past credit history.

These models can reflect and even reinforce historical biases, for example by penalizing people simply for lacking a credit history.

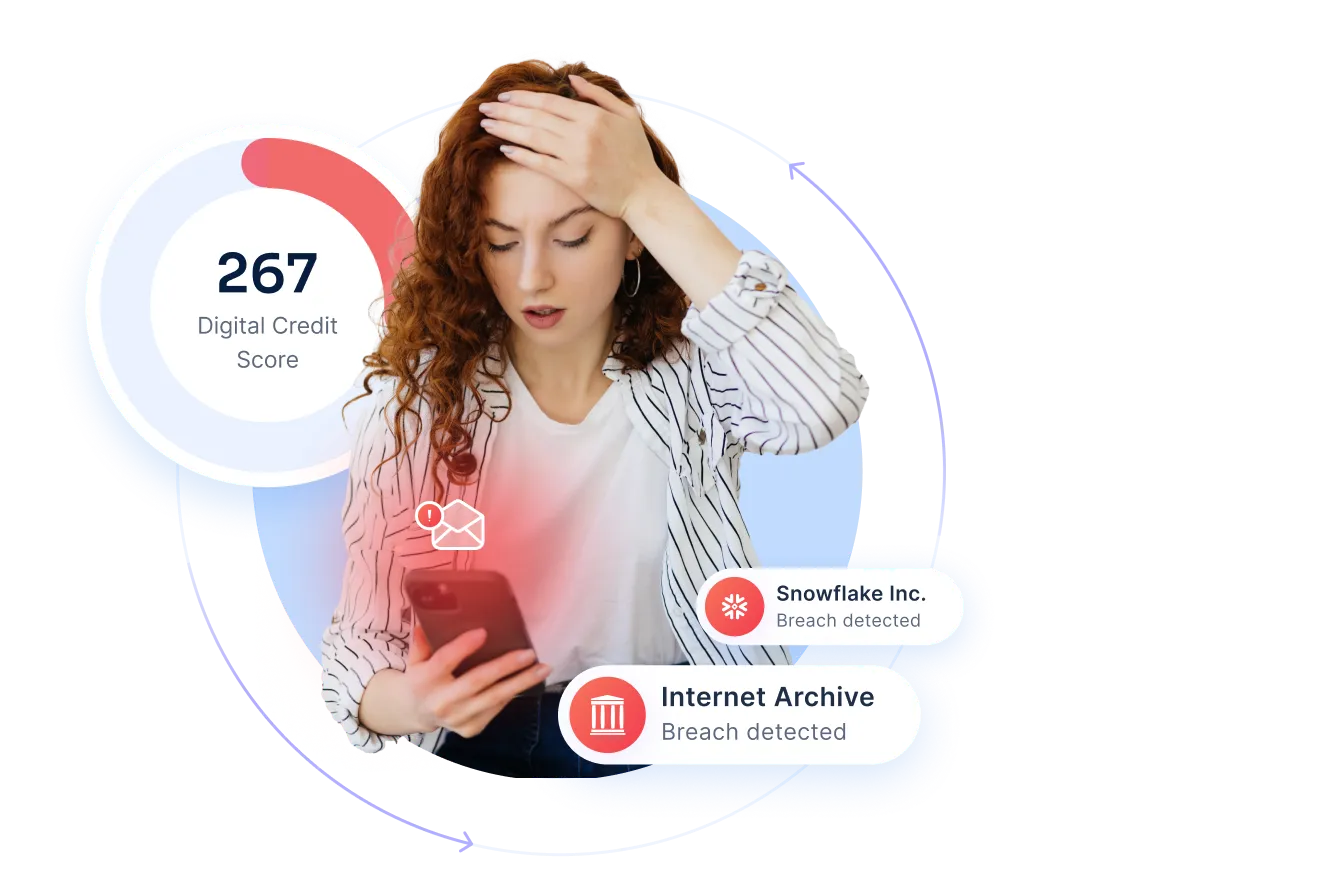

This creates a “data desert,” also known as credit invisibility, for many creditworthy borrowers, including recent immigrants, gig economy workers, and young adults.

Because their financial lives don’t fit the old system, they are often unfairly excluded.

The solution: using alternative data for financial inclusion

A more complete picture comes from alternative data: new data sources used to gauge creditworthiness.

Think of signals like consistent rent and utility payments. You can also analyze cash flow or look at educational and career history. This richer dataset provides a clearer view.

For example, a student may have a thin credit file yet have paid their rent on time every month. An AI model using alternative data can recognize that reliability.

Conversely, a person with a high credit score might have recently registered with multiple gambling platforms, a risk that traditional models could overlook.

Alternative data lets lenders score credit-invisible people, opening the door to fairer, more inclusive lending.
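To make the rent example concrete, here is a minimal Python sketch of how one alternative-data feature might be derived from payment history. The `RentPayment` record, the `on_time_ratio` function, and the five-day grace period are hypothetical choices for illustration, not a standard industry definition.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class RentPayment:
    due: date
    paid: Optional[date]  # None means the payment was never made


def on_time_ratio(payments: List[RentPayment], grace_days: int = 5) -> Optional[float]:
    """Share of rent payments made no later than `grace_days` after the due date.

    Returns None for an empty history so that "no data" is not confused
    with "bad payment record".
    """
    if not payments:
        return None
    on_time = sum(
        1
        for p in payments
        if p.paid is not None and (p.paid - p.due).days <= grace_days
    )
    return on_time / len(payments)


# Hypothetical thin-file student: twelve months of rent, each paid two days after the due date.
history = [RentPayment(due=date(2024, m, 1), paid=date(2024, m, 3)) for m in range(1, 13)]
print(on_time_ratio(history))  # 1.0
```

Returning `None` rather than `0.0` for an empty history keeps a missing signal distinct from a poor one, which matters for the credit-invisible applicants this kind of feature is meant to help.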

Tip #2. Turn algorithms into decisions you can act on

To see how algorithms reshape lending, let’s start with their role in turning raw data into meaningful insights.

The role of algorithms in AI credit scoring

AI and machine learning are changing how we assess credit risk.

These advanced algorithms can analyze massive datasets in seconds. They process far more information than any human could.

This allows lenders to move beyond simple numbers and gain a deeper understanding of a borrower's financial behavior.

The real advantage, however, comes from how the data is interpreted: turning raw patterns into underwriting decisions that balance risk and opportunity.

Transition to AI-powered credit scoring models

The credit industry is shifting away from older, static scoring methods that use fixed rules to evaluate risk.

In contrast, new AI/ML models are dynamic and predictive. They learn from new data and adapt over time.

The result is more precise risk assessment and greater efficiency. Lenders can make faster, more accurate decisions, which benefits both the business and the consumer.

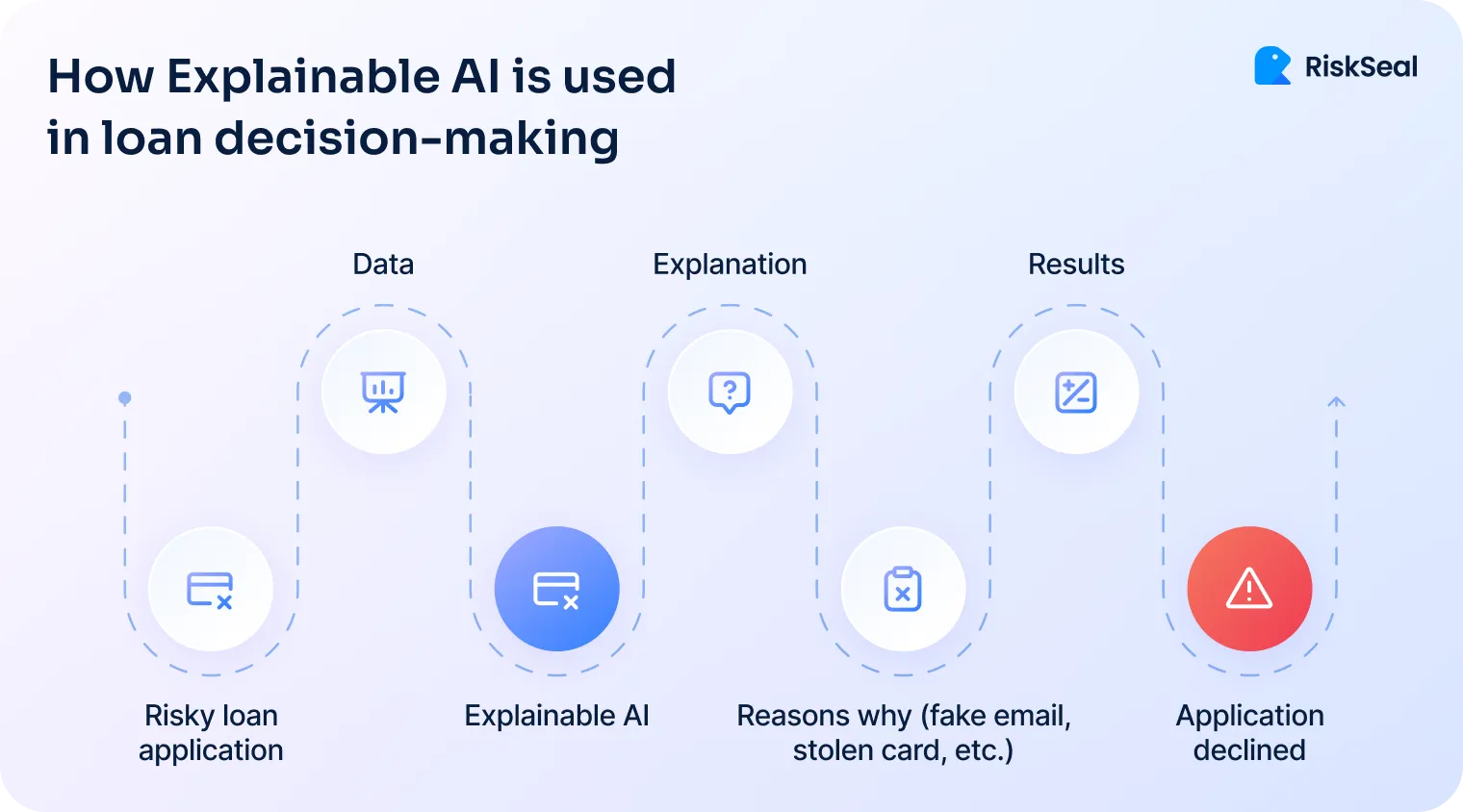

Making AI credit risk models transparent and explainable

In a regulated industry, you can’t rely on a “black box” model. Every loan decision must be explainable, not only for compliance but also to build consumer trust.

Borrowers deserve clear, actionable reasons when they’re denied credit. This is why lenders are adopting Explainable AI (XAI) and strong model governance practices.

These tools make complex outputs easier to interpret and create audit trails for every decision.

The goal isn’t to weaken AI models but to make them transparent and trustworthy. This helps risk teams meet regulations while ensuring fairness in every decision.
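As a simple illustration of explainability, the sketch below assumes a hypothetical logistic regression with three made-up features and weights. Because the model is linear in log-odds, each feature's contribution relative to a baseline applicant can be computed directly and ranked into reason codes for a denial.

```python
import math
from typing import Dict, List

# Hypothetical model: weights act on the log-odds of repayment,
# so a positive weight raises the score and a negative one lowers it.
WEIGHTS = {
    "on_time_rent_ratio": 3.0,
    "months_of_cash_flow_data": 0.05,
    "gambling_platforms": -0.8,
}
BIAS = -1.0


def repayment_probability(applicant: Dict[str, float]) -> float:
    """Logistic model: sigmoid of the weighted sum of features."""
    z = BIAS + sum(w * applicant[f] for f, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def reason_codes(applicant: Dict[str, float], baseline: Dict[str, float], top_n: int = 2) -> List[str]:
    """Features that pulled this applicant's log-odds furthest below the baseline.

    For a linear model, w * (x - baseline) is each feature's exact share of
    the log-odds gap between the applicant and the baseline applicant.
    """
    contributions = {f: w * (applicant[f] - baseline[f]) for f, w in WEIGHTS.items()}
    negative = sorted((c, f) for f, c in contributions.items() if c < 0)
    return [f for _, f in negative[:top_n]]


baseline = {"on_time_rent_ratio": 0.9, "months_of_cash_flow_data": 12, "gambling_platforms": 0}
applicant = {"on_time_rent_ratio": 0.6, "months_of_cash_flow_data": 6, "gambling_platforms": 3}
print(round(repayment_probability(applicant), 3))  # 0.214
print(reason_codes(applicant, baseline))  # ['gambling_platforms', 'on_time_rent_ratio']
```

For non-linear models such as gradient-boosted trees, attribution libraries like SHAP play a similar role. The baseline you compare against (for example, a typical approved applicant) is a design choice worth documenting for auditors.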

Tip #3. Make race and ethnicity bias checks a standard

One of the clearest examples of why race and ethnicity estimation matters is its role in regulatory compliance.

Complying with the Equal Credit Opportunity Act (ECOA)

Lenders are legally required to operate without discrimination. Regulations like the Equal Credit Opportunity Act (ECOA) strictly prohibit using an applicant's race, ethnicity, or gender in credit decisions.

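However, for some products, most notably home mortgages, the law requires lenders to collect this data for monitoring purposes, and mortgage lenders must report it to regulators under the Home Mortgage Disclosure Act (HMDA). For other credit products, where lenders generally may not ask for it, they often estimate race and ethnicity statistically so they can still monitor outcomes.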

The primary goal is to detect and prevent discriminatory lending patterns across the entire industry.

This practice ensures a system of checks and balances, holding institutions accountable.

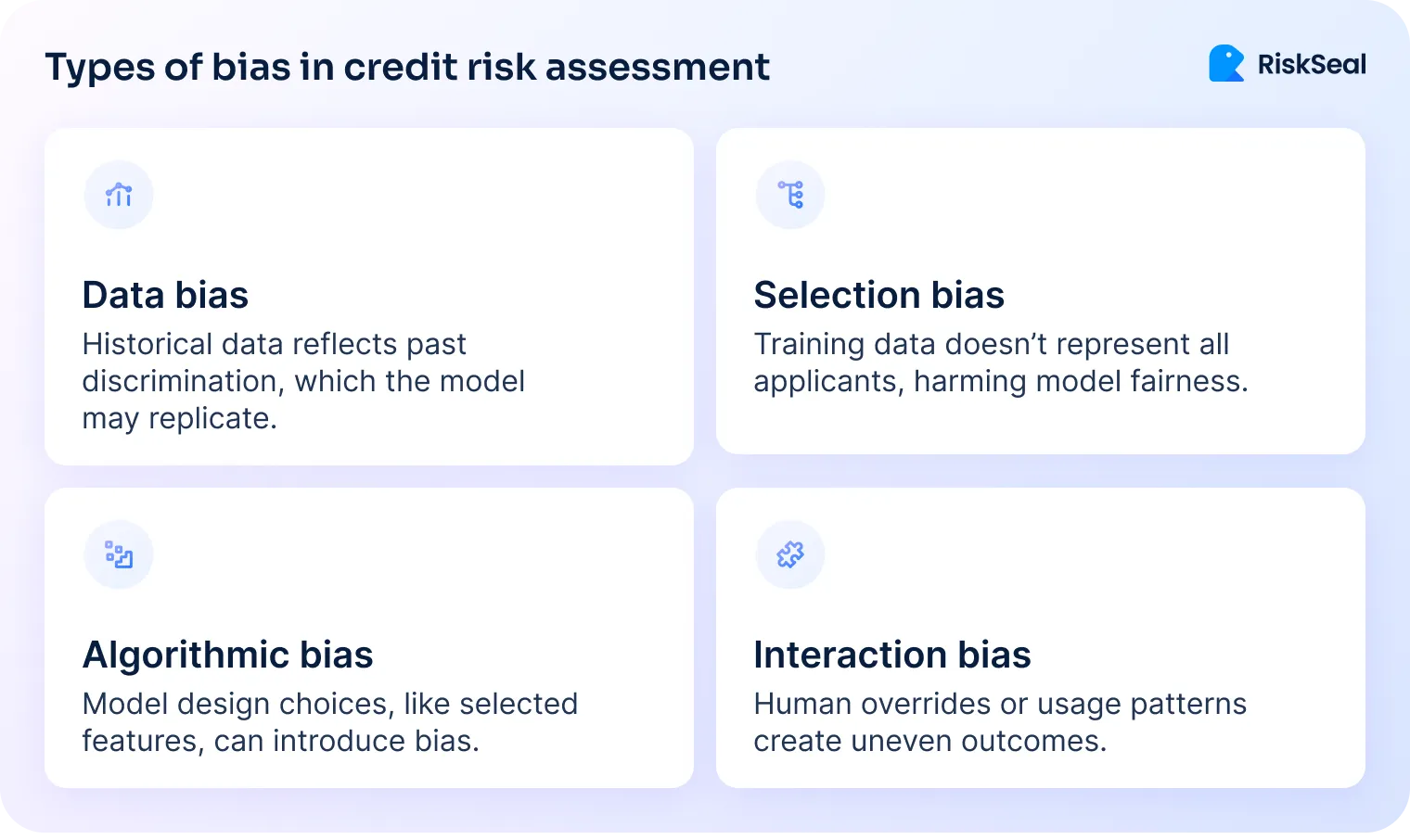

Addressing discrimination with disparate impact analysis

Lenders and risk teams use this data to test whether seemingly neutral lending policies unintentionally disadvantage a protected group.

For instance, an algorithm might deny a disproportionate share of applicants from a particular neighborhood. If that neighborhood is predominantly home to minority residents, the model could have a discriminatory effect even though it never uses race as an input.

Credit risk managers do not use this demographic data to make individual decisions. Instead, they use it to test and audit their AI models for bias.

By doing so, they can identify and correct unintended bias within their algorithms. This process helps make AI a tool for fairness rather than a system that reinforces existing inequities.
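A common first pass at disparate impact analysis compares approval rates across groups. The sketch below computes each group's adverse impact ratio against a reference group and flags any ratio under 0.8, the “four-fifths” rule of thumb that originated in U.S. employment-selection guidelines. The group names and counts are hypothetical, and a flagged ratio is a signal to investigate rather than a finding of discrimination.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Decision = Tuple[str, bool]  # (group label, approved?)


def approval_rates(decisions: Iterable[Decision]) -> Dict[str, float]:
    totals: Counter = Counter()
    approvals: Counter = Counter()
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}


def adverse_impact_ratios(decisions: Iterable[Decision], reference_group: str) -> Dict[str, float]:
    """Each group's approval rate divided by the reference group's rate.

    Assumes the reference group has at least one approval.
    """
    rates = approval_rates(decisions)
    ref = rates[reference_group]
    return {g: r / ref for g, r in rates.items()}


def flagged_groups(decisions: Iterable[Decision], reference_group: str, threshold: float = 0.8) -> List[str]:
    """Groups whose ratio falls below the threshold (0.8 = four-fifths rule of thumb)."""
    ratios = adverse_impact_ratios(decisions, reference_group)
    return sorted(g for g, r in ratios.items() if r < threshold)


decisions = (
    [("group_a", True)] * 80 + [("group_a", False)] * 20
    + [("group_b", True)] * 55 + [("group_b", False)] * 45
)
print(flagged_groups(decisions, reference_group="group_a"))  # ['group_b']
```

Here group_b's ratio is 0.55 / 0.80 ≈ 0.69. In practice the group labels may come from self-reported monitoring data or from statistical estimates, and small groups call for a significance test before drawing conclusions.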

The ultimate goal is to build a system where all applicants are evaluated on their individual financial merit, not on their background.

Tip #4. Go beyond minimum compliance requirements

Meeting fair lending regulations is a must. But true fairness requires a proactive approach. It's about building a system designed for equity from the ground up.

The importance of true fairness

Simply checking a box for compliance is no longer enough to achieve real equity and inclusion.

Lenders must go beyond minimum standards and actively design their systems to produce fair outcomes for all applicants.

This shift frames fair lending not just as a regulatory burden but as a competitive advantage.

When lenders build a reputation for fairness, they can attract and retain a broader, more loyal customer base.

Ultimately, it’s also an ethical responsibility to ensure that financial opportunities are accessible to everyone.

Techniques for proactive data governance in AI

To achieve this, lenders are leveraging new technologies for continuous monitoring. Tools like Lender Data Analytics (LDA) perform ongoing audits of lending portfolios.

These systems can flag potential fair lending risks in real time, allowing risk teams to address them before they become a problem.

Such a proactive approach is supported by robust data governance and independent model validation teams. These teams ensure that AI models remain unbiased and perform as intended.

Continuous oversight helps credit providers maintain fairness and trust in an AI-driven lending environment.

A smart strategy for lenders is to work with alternative data providers like RiskSeal that use Explainable AI (XAI). By enriching risk assessment models with non-traditional data, we give lenders a fuller view of each applicant.

Our scores come with built-in XAI, so risk teams can see exactly how decisions are made. That transparency supports compliance and makes it easier to extend credit to a more diverse customer base.

A step-by-step blueprint for fair lending with AI

Creating an equitable lending system with AI requires a deliberate strategy.

Here is a step-by-step blueprint for risk teams to follow.

Step 1. Define your fairness objectives clearly

Fairness in lending goes beyond basic regulatory compliance. Set measurable and specific goals that align with both compliance and inclusion.

For example, your objective could be to reduce the loan rejection rate for thin-file borrowers by 15%, or to approve a set percentage of first-time borrowers from underserved communities.

Align these goals directly with your business objectives. This ensures that your pursuit of equity also drives business growth.

It shows that fairness and profitability can go hand in hand.
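A target like “reduce the rejection rate by 15%” can mean a relative cut (40% to 34%) or a cut of 15 percentage points (40% to 25%), so it helps to pin down the arithmetic before reporting progress. This sketch assumes the relative reading and a hypothetical 40% baseline rejection rate for thin-file applicants.

```python
def required_rate(baseline_rate: float, relative_cut: float) -> float:
    """Rejection rate needed to achieve a relative reduction from the baseline."""
    return baseline_rate * (1 - relative_cut)


def target_met(baseline_rate: float, current_rate: float, relative_cut: float = 0.15) -> bool:
    # Small tolerance so a rate exactly at the target is not missed due to float rounding.
    return current_rate <= required_rate(baseline_rate, relative_cut) + 1e-9


baseline = 0.40  # hypothetical: 40% of thin-file applicants rejected last year
print(round(required_rate(baseline, 0.15), 2))  # 0.34
print(target_met(baseline, current_rate=0.33))  # True
print(target_met(baseline, current_rate=0.36))  # False
```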

Step 2. Audit your data inputs

Your AI models are only as good as the data they use.

First, identify sources of potential bias in your existing data, such as incomplete credit histories or outdated bureau data. Next, enrich your datasets with alternative data.

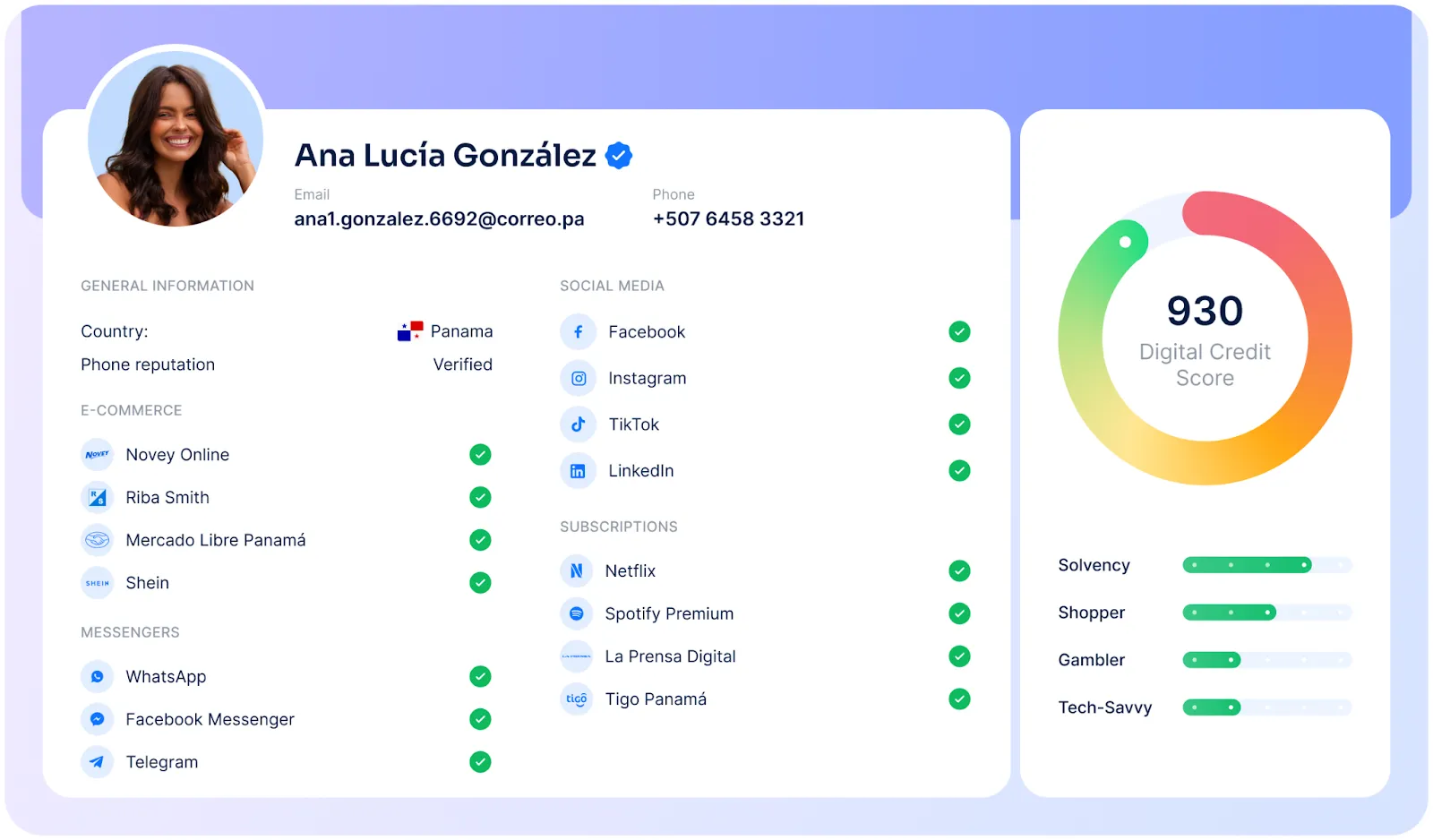

We recommend collecting data points like:

- email and phone lookup data

- IP and location cross-checking

- social media and messenger presence

- e-commerce activity

- subscriptions

In our experience at RiskSeal, this approach provides a far more accurate picture of a borrower's reliability, particularly for those with limited traditional credit history.
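One way to start the audit is to measure, per applicant segment, how often traditional bureau data is missing or stale. The sketch below assumes hypothetical record fields (`segment`, `bureau_last_updated`) and treats files older than 365 days as stale; both are illustrative choices to adapt to your own data.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable


def coverage_report(applicants: Iterable[dict], as_of: date, stale_after_days: int = 365) -> Dict[str, Dict[str, float]]:
    """Per-segment share of applicants with no bureau file or a stale one.

    Each applicant is a dict with hypothetical keys:
      'segment'              e.g. 'recent_immigrant', 'gig_worker'
      'bureau_last_updated'  date of the latest bureau update, or None if no file
    """
    counts = defaultdict(lambda: {"total": 0, "missing": 0, "stale": 0})
    for a in applicants:
        c = counts[a["segment"]]
        c["total"] += 1
        updated = a["bureau_last_updated"]
        if updated is None:
            c["missing"] += 1
        elif (as_of - updated).days > stale_after_days:
            c["stale"] += 1
    return {
        seg: {
            "missing_rate": c["missing"] / c["total"],
            "stale_rate": c["stale"] / c["total"],
        }
        for seg, c in counts.items()
    }


applicants = [
    {"segment": "recent_immigrant", "bureau_last_updated": None},
    {"segment": "recent_immigrant", "bureau_last_updated": date(2024, 6, 1)},
    {"segment": "established", "bureau_last_updated": date(2024, 6, 1)},
    {"segment": "established", "bureau_last_updated": date(2022, 1, 1)},
]
print(coverage_report(applicants, as_of=date(2024, 12, 31)))
```

Segments with high missing or stale rates are natural candidates for enrichment with the alternative data points listed above.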

Step 3. Build explainable AI models

Do not settle for "black box" algorithms. Prioritize building transparent, explainable AI models from the start. Ensure that the outputs of your models are easily interpretable.

You can achieve this with inherently interpretable ML methods or by using API-based credit risk scoring solutions that collect data ethically and interpret it fairly.

This transparency is crucial both for your compliance teams, who must be able to explain every lending decision, and for applicants, who deserve a clear reason for an approval or denial.

Step 4. Test for bias continuously

Fairness is not a one-time check. You must continuously test for bias.

Regularly perform disparate impact analysis to detect if your lending policies disproportionately affect certain groups.

If you discover patterns of unfairness, take action:

- adjust model features

- retrain the algorithm with a more balanced dataset

- change the weights of certain variables to correct the bias
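One way to retrain with a more balanced dataset is reweighing, a pre-processing technique that gives each training row a weight so that group membership and the outcome label become statistically independent in the weighted data. This is a minimal sketch on toy data and only one option among several; the group labels are hypothetical and are used only to compute weights, never as a model input.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def reweighing_weights(groups: Sequence[Hashable], labels: Sequence[Hashable]) -> List[float]:
    """Per-row training weights w(g, y) = P(g) * P(y) / P(g, y).

    After weighting, every group has the same share of positive labels as the
    dataset overall, so label imbalance alone no longer ties group to outcome.
    """
    if len(groups) != len(labels):
        raise ValueError("groups and labels must be the same length")
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Toy historical data: label 1 = loan repaid in the training sample.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
print([round(w, 3) for w in weights])  # [0.667, 0.667, 0.667, 2.0, 2.0, 0.667, 0.667, 0.667]
```

Many scikit-learn estimators, including `LogisticRegression`, accept these values through the `sample_weight` argument of `fit`.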

Step 5. Integrate fairness into monitoring

Treat fairness as a core performance metric, just like accuracy and risk. Integrate it into your ongoing monitoring dashboards.

We at RiskSeal have found that this approach ensures fairness remains a key priority.

Schedule periodic audits and model recalibrations. This ensures your lending decisions stay equitable as economic conditions and borrower behaviors evolve.

This proactive oversight is the final piece of a truly fair and responsible AI-powered lending system.
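To treat fairness as a tracked metric alongside accuracy, a monitoring job can compute both for each reporting period and raise an alert when either drops below a floor. The sketch below assumes a hypothetical history format, uses the ratio of the lowest to the highest group approval rate as the fairness metric, and uses illustrative floors of 0.8 for fairness and 0.7 for accuracy.

```python
from typing import Dict, Iterable, List, Tuple

Decision = Tuple[str, bool]  # (group label, approved?)


def min_max_approval_ratio(decisions: Iterable[Decision]) -> float:
    """Lowest group approval rate divided by the highest (1.0 = parity).

    Assumes the period contains at least one decision.
    """
    totals: Dict[str, int] = {}
    approvals: Dict[str, int] = {}
    for group, approved in decisions:
        totals[group] = totals.get(group, 0) + 1
        approvals[group] = approvals.get(group, 0) + int(approved)
    rates = [approvals[g] / totals[g] for g in totals]
    if max(rates) == 0:
        return 1.0  # nobody approved: all rates are equal, so no disparity to report
    return min(rates) / max(rates)


def monitoring_alerts(history: List[dict], fairness_floor: float = 0.8,
                      accuracy_floor: float = 0.7) -> List[Tuple[str, str, float]]:
    """Check each period's fairness ratio and accuracy against their floors."""
    alerts = []
    for row in history:
        ratio = min_max_approval_ratio(row["decisions"])
        if ratio < fairness_floor:
            alerts.append((row["period"], "fairness", round(ratio, 3)))
        if row["accuracy"] < accuracy_floor:
            alerts.append((row["period"], "accuracy", row["accuracy"]))
    return alerts


history = [
    {"period": "2024-Q1", "accuracy": 0.82,
     "decisions": [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 7 + [("B", False)] * 3},
    {"period": "2024-Q2", "accuracy": 0.81,
     "decisions": [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5},
]
print(monitoring_alerts(history))  # [('2024-Q2', 'fairness', 0.625)]
```

Here accuracy barely moves between quarters while the approval gap widens, which is exactly the kind of drift an accuracy-only dashboard would miss.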

How RiskSeal utilizes AI to make credit scoring fairer

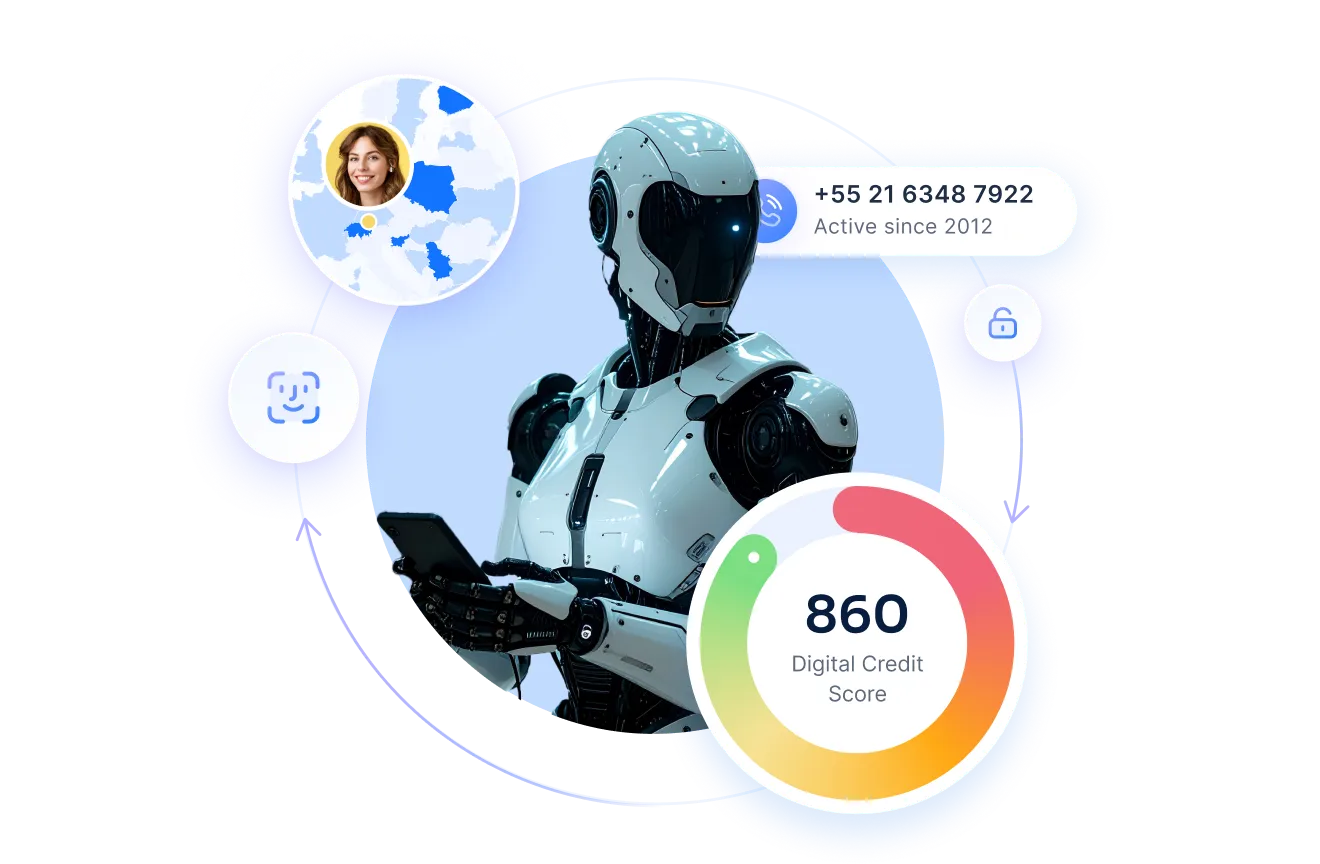

RiskSeal applies AI to credit scoring in a practical and compliance-friendly way.

Our approach combines alternative data and full transparency to help lenders make fairer, faster, and safer decisions.

Here’s how:

- Actionable alternative scores. AI transforms raw alternative data, such as digital footprint and subscriptions, into powerful variables.

- Model optimization. Machine learning detects repayment patterns and fraud risk that traditional credit bureau data alone would miss.

- Real-time decisioning. Our API delivers risk scores in seconds, supporting digital-first lending without delays.

Every model is explainable and transparent, avoiding the black-box problem.

Lenders see exactly how each decision is made. This ensures compliance with regulations and builds trust with customers.

The result is better outcomes across the board: more approvals for good borrowers, fewer defaults, and stronger protection against fraud.

Key takeaways

The key to achieving fairness in lending is not to avoid AI, but to apply it with purpose.

By using alternative data, prioritizing explainable models, and implementing continuous bias testing, lenders can build a more equitable and profitable system.

This proactive approach transforms fair lending from a regulatory challenge into a powerful driver of innovation and social good, ultimately expanding financial access for everyone.

Download Your Free Resource

Get a practical, easy-to-use reference packed with insights you can apply right away.
