What Lenders Gain by Using Explainable AI in Risk Assessment

Dive into explainable AI in credit scoring to boost transparency, reduce bias, build trust, and ensure full regulatory compliance.


Many companies rely on artificial intelligence when making decisions. Credit institutions are no exception.

But there is a problem. Many of them still use black-box AI algorithms in their credit scoring processes, leaving both lenders and borrowers unaware of the reasons behind certain decisions.

In this article, we will discuss why it is so important for lenders to prioritize AI explainability.

What can it change for them? Why is it better to abandon the use of opaque algorithms?

Read on.

The role of data in explainable credit decisioning

Reliable data forms the foundation of any AI credit scoring system. The validity of any AI-driven decision depends on the quality of the data it is based on.

Structured data enables the creation of interpretable features. It simplifies the mapping between input variables and credit outcomes.

Why data quality and accuracy are critical for AI credit models

An AI model performs effectively only when it has access to accurate and high-quality information.

Distorted or inconsistent input data can lead to faulty predictions. As a result, the lender may receive a questionable credit decision.

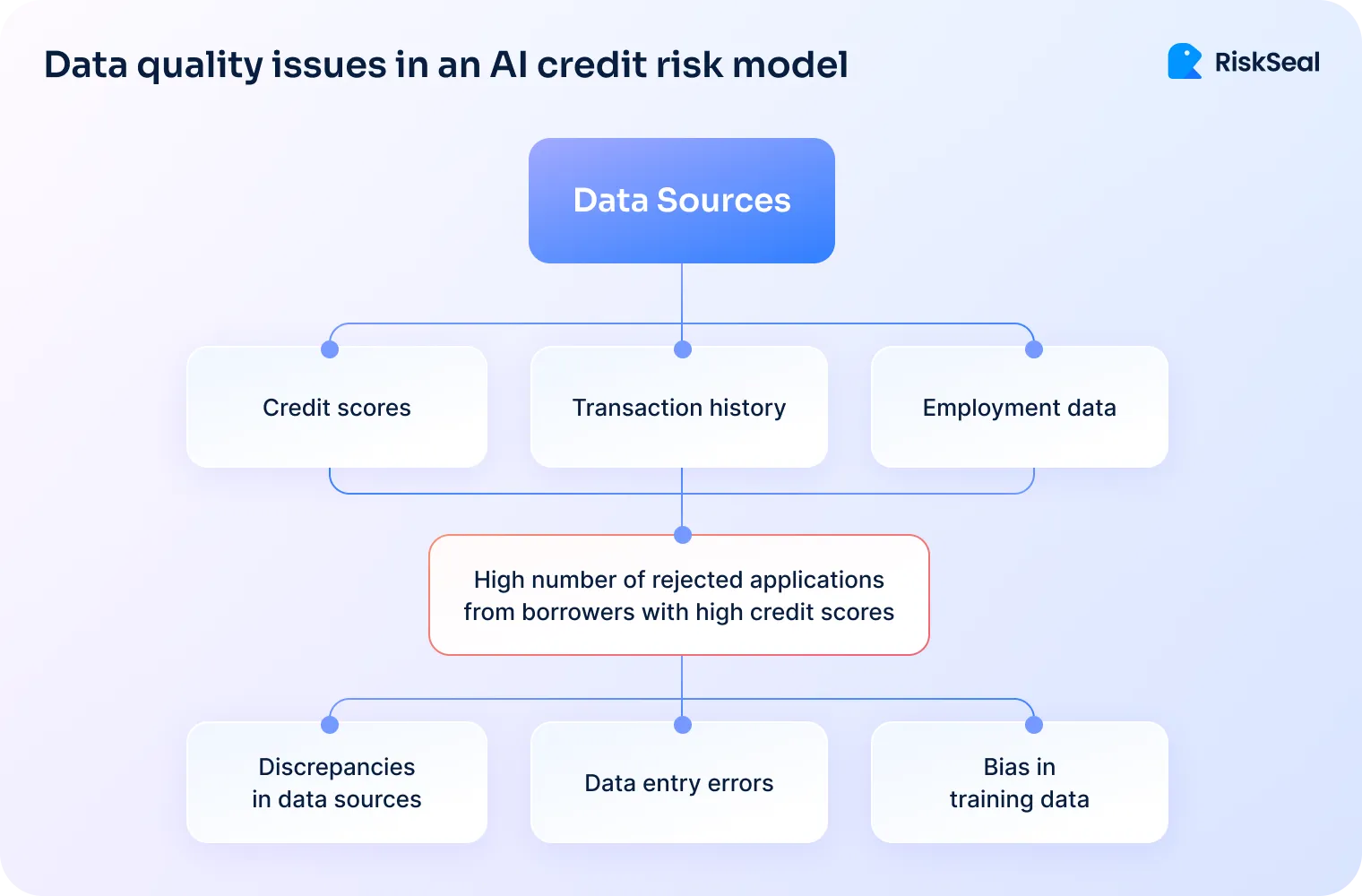

Case study. Data issues in AI credit models

Let’s consider the importance of these criteria with a specific example.

A digital lender uses AI in credit risk management. The implemented model assesses the probability of default based on the following data:

- Credit scores

- Transaction history

- Employment data

However, the lender has recently noticed a high number of rejected applications from borrowers with high credit scores.

A thorough audit helped identify issues with the quality and accuracy of the input data:

1. Discrepancies in data sources. The model relied on two databases. One was updated daily, the other monthly. Because of this, the credit scores of some applicants were outdated, leading to inaccurate risk assessments.

2. Data entry errors. Some credit applications contained mistakes due to manual input. For example, one applicant’s annual income was entered as $4,500 instead of $45,000. This affected the debt-to-income ratio calculation.

3. Bias in training data. The model was trained on data from salaried employees. This led to a bias against borrowers who have a stable income but are not formally employed, such as freelancers or self-employed individuals.
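The first two problems the audit found can be caught by simple checks before an application reaches the model. Below is a minimal Python sketch, assuming each application carries a hypothetical `score_updated` date along with annual income and monthly debt payments; the 30-day staleness window, the $10,000 income floor, and the 100% DTI ceiling are illustrative thresholds, not values from the case study.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Illustrative thresholds (assumptions, not values from the case study)
MAX_SCORE_AGE = timedelta(days=30)   # flag credit scores not refreshed within a month
MIN_PLAUSIBLE_INCOME = 10_000        # annual income, in dollars
MAX_PLAUSIBLE_DTI = 1.0              # debt payments above 100% of income suggest a typo


@dataclass
class Application:
    applicant_id: str
    credit_score: int
    score_updated: date     # hypothetical field: when the bureau score was last refreshed
    annual_income: float
    monthly_debt: float     # total monthly debt payments


def debt_to_income(app: Application) -> float:
    """Monthly debt payments divided by gross monthly income."""
    return app.monthly_debt / (app.annual_income / 12)


def validate(app: Application, today: date) -> list[str]:
    """Return data-quality warnings; an empty list means no issue was detected."""
    issues = []
    age = today - app.score_updated
    if age > MAX_SCORE_AGE:
        issues.append(f"credit score is {age.days} days old")
    if app.annual_income <= 0:
        issues.append("annual income is missing or non-positive")
    else:
        dti = debt_to_income(app)
        if app.annual_income < MIN_PLAUSIBLE_INCOME or dti > MAX_PLAUSIBLE_DTI:
            issues.append(f"income {app.annual_income:,.0f} gives DTI {dti:.0%}; check for an entry error")
    return issues


if __name__ == "__main__":
    today = date(2024, 6, 30)
    typo = Application("A-1", 720, date(2024, 6, 29), annual_income=4_500, monthly_debt=1_500)
    stale = Application("A-2", 710, date(2024, 4, 30), annual_income=45_000, monthly_debt=1_500)
    print(validate(typo, today))   # ['income 4,500 gives DTI 400%; check for an entry error']
    print(validate(stale, today))  # ['credit score is 61 days old']
```

With monthly debt of $1,500, the mistyped income of $4,500 produces a DTI of 400% instead of 40%, which is exactly the kind of distortion described above. Record-level checks like these do not address the third problem; training-data bias shows up only when outcomes are compared across groups of borrowers.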

The role of data transparency in fair credit assessment

Credit risk assessment requires clear visibility into the data used by AI models.

This allows managers to track which data points influenced the decision, such as credit scores, income levels, payment history, or other financial indicators.

This kind of traceability builds trust and accountability.

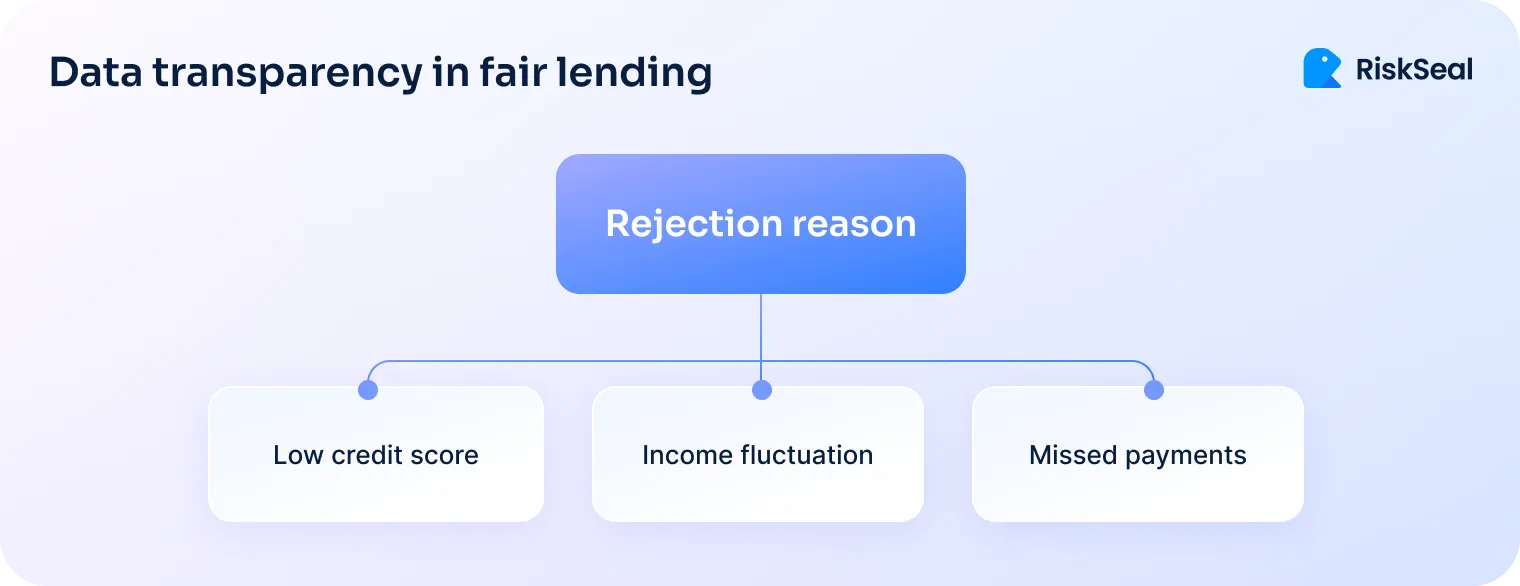

Case study. AI credit decisions with transparent data

Imagine a neobank that uses an AI model to evaluate credit applications. A small business owner applies for a loan but receives a rejection.

Credit risk managers must clearly state why the application was denied, ideally in more detail than a generic “You were denied due to low creditworthiness.”

AI models that use transparent data make this possible.

Systems based on them provide a clear breakdown of the data points that influenced the decision:

- Credit score: 640 (below the bank’s threshold of 680).

- Income stability: income fluctuated over the past 12 months, with a 30% drop in Q4.

- Payment history: two missed payments in the past six months on the business credit line.

- Debt-to-income ratio: 45% (above the allowable limit of 40%).

This transparency allows applicants to understand why they were rejected and helps lenders verify that the model is treating applicants fairly.
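A breakdown like this can be generated by testing each input against the lender’s policy limits and reporting every limit that was breached. The following minimal Python sketch uses the 680 score threshold and the 40% DTI limit from the example; the 25% income-drop limit and the zero-missed-payments rule are hypothetical policy values added for illustration.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    credit_score: int
    income_drop: float        # largest quarterly income drop over 12 months, e.g. 0.30 for 30%
    missed_payments_6m: int   # missed payments in the past six months
    dti: float                # debt-to-income ratio, e.g. 0.45 for 45%


# Each rule: (factor name, test that passes when the factor is acceptable, explanation text).
# The 680 and 40% limits come from the example; the other two limits are hypothetical.
RULES = [
    ("Credit score", lambda a: a.credit_score >= 680,
     lambda a: f"{a.credit_score} (below the threshold of 680)"),
    ("Income stability", lambda a: a.income_drop <= 0.25,
     lambda a: f"income fell {a.income_drop:.0%} in one quarter (limit 25%)"),
    ("Payment history", lambda a: a.missed_payments_6m == 0,
     lambda a: f"{a.missed_payments_6m} missed payment(s) in the past six months"),
    ("Debt-to-income ratio", lambda a: a.dti <= 0.40,
     lambda a: f"{a.dti:.0%} (above the allowable limit of 40%)"),
]


def explain(applicant: Applicant) -> tuple[str, list[str]]:
    """Return the decision and one reason for every rule the applicant failed."""
    reasons = [f"{name}: {describe(applicant)}"
               for name, passes, describe in RULES if not passes(applicant)]
    return ("declined" if reasons else "approved"), reasons


if __name__ == "__main__":
    decision, reasons = explain(Applicant(credit_score=640, income_drop=0.30,
                                          missed_payments_6m=2, dti=0.45))
    print(decision)
    for reason in reasons:
        print(" -", reason)
```

This is a rule-based illustration of the reporting step. When the decision comes from a statistical model rather than fixed rules, the reasons would instead come from the model’s per-feature contributions.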

How data categorization shapes AI decision making

Not all data points carry the same weight.

Understanding how they are categorized in decision-making models is key to effectively explaining AI-driven outcomes.

Highlighting the variables that had the greatest impact helps demystify complex decisions.

Case study. When credit score matters more than income

Consider a creditworthiness assessment AI model used by a fintech lender. It uses multiple data points with different weights.

Suppose a loan application is evaluated using the following data points:

- Credit score (weight: 40%)

- Debt-to-income ratio (weight: 25%)

- Payment history (weight: 20%)

- Income stability (weight: 15%)

According to this data hierarchy, a person who pays bills on time and has stable earnings may still be rejected if their credit score is weak and debt-to-income ratio is high.

When evaluating an AI-based decision, a manager will consider the following breakdown:

- The credit score carried the largest weight (40%) in the lending decision. The borrower’s score (600) was significantly below the acceptable threshold, contributing to a higher risk rating.

- The debt-to-income ratio was the second most influential factor. At 50% (above the acceptable limit of 40%), it increased the risk, despite stable income.

Categorizing and prioritizing data in this way allows the manager to give a comprehensive explanation for the denial and point out areas for improvement to the applicant.
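The hierarchy above can be expressed as a weighted sum of risk sub-scores. Below is a minimal Python sketch that uses the four weights from the example; how each raw input is converted into a 0-to-1 risk sub-score is a hypothetical choice made only to keep the example concrete.

```python
# Factor weights from the example above
WEIGHTS = {
    "credit_score": 0.40,
    "debt_to_income": 0.25,
    "payment_history": 0.20,
    "income_stability": 0.15,
}


def clamp(x: float) -> float:
    """Limit a value to the range [0, 1]."""
    return max(0.0, min(1.0, x))


def risk_subscores(credit_score: int, dti: float, missed_payments: int,
                   income_volatility: float) -> dict:
    """Map raw inputs to risk sub-scores in [0, 1], where 1 means highest risk.
    These mappings are hypothetical, chosen only to make the example concrete."""
    return {
        "credit_score": clamp((850 - credit_score) / (850 - 300)),  # 300-850 score range
        "debt_to_income": clamp(dti),                               # DTI of 100%+ = max risk
        "payment_history": clamp(missed_payments / 4),              # 4+ missed payments = max risk
        "income_stability": clamp(income_volatility),               # e.g. std/mean of monthly income
    }


def weighted_risk(subscores: dict) -> tuple[float, list[tuple[str, float]]]:
    """Return the total risk and each factor's contribution, largest first."""
    contributions = {name: WEIGHTS[name] * value for name, value in subscores.items()}
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return sum(contributions.values()), ranked


if __name__ == "__main__":
    # Applicant from the example: score 600, DTI 50%, on-time payments, stable income
    total, ranked = weighted_risk(risk_subscores(600, 0.50, 0, 0.05))
    print(f"total risk: {total:.3f}")
    for name, contribution in ranked:
        print(f"{name:>17}: {contribution:.3f} ({contribution / total:.0%} of total)")
```

A 40% weight is not the same as a 40% share of a particular decision. For this applicant, the credit score accounts for roughly 58% of the total risk because the score is far from ideal, while on-time payments contribute nothing.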

Data management best practices that support regulatory compliance

Data management is an essential aspect of financial institutions’ operations. This issue has become especially relevant with the tightening of regulation in this area.

Credit risk managers must ensure that the data used in AI systems complies with regulatory standards and can be easily verified when needed to explain decisions.

Case study. AI compliance in banking

Let’s consider this in the context of a regional bank that uses artificial intelligence to assess risk.

Its scoring model uses the following data:

- Credit history

- Income

- Employment status

- Spending patterns

With the introduction of new financial regulations, the bank must ensure that the AI system complies with data privacy and transparency standards.

This is the role that data management plays:

1. Data access control. The bank defines the group of individuals who are allowed to view, edit, or export clients’ confidential data.

This helps prevent unauthorized use or manipulation.

2. Data lineage tracking. The bank records the source of every data point the AI model uses. This makes it possible to identify:

- When and where the data was collected

- How it was processed

- What changes were made

This enables the bank to verify the objectivity of the decision. It also allows the bank to monitor whether data processing complies with regulatory requirements.

3. Audit trails and reporting. The bank automates the generation of reports that include:

- Credit decisions generated by AI

- Model input data

- Model output data

Based on these reports, credit risk managers can provide information about how specific data points influenced the decision.

This data can also be used to confirm that prohibited attributes — such as race or gender — were not used in credit scoring.

All of this can be useful during regulatory audits.

4. Data retention policies. The bank establishes data retention protocols to comply with laws on confidential information security.

These protocols involve securely storing data for five years. After that period, the information is either anonymized or deleted to minimize risk.
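The four practices in this case study can be combined in a small record-keeping layer. Below is a minimal Python sketch, not a production design: the role names, the source names, and the `TrackedValue`, `authorize`, `audit_record`, and `apply_retention` helpers are all hypothetical, and the lists of prohibited attributes and direct identifiers are illustrative only. For the retention step, this sketch takes the anonymization route rather than deletion. A real bank would enforce these controls in its identity, data, and logging infrastructure.

```python
import json
from dataclasses import dataclass, field
from datetime import date, datetime, timezone

# Illustrative policy values (assumptions, not the bank's actual configuration)
PERMISSIONS = {
    "credit_analyst": {"view"},
    "data_steward": {"view", "edit"},
    "compliance_officer": {"view", "export"},
}
PROHIBITED_ATTRIBUTES = {"race", "gender"}
DIRECT_IDENTIFIERS = {"client_id", "name"}
RETENTION_YEARS = 5


def authorize(role: str, action: str) -> bool:
    """1. Access control: deny by default; unknown roles get no permissions."""
    return action in PERMISSIONS.get(role, set())


@dataclass
class TrackedValue:
    """2. Lineage: a data point with its source, collection date, and change history."""
    value: object
    source: str
    collected_on: date
    changes: list = field(default_factory=list)

    def update(self, new_value: object, reason: str, on: date) -> None:
        # Record what changed, when, and why before overwriting the value
        self.changes.append({"on": on.isoformat(), "from": self.value,
                             "to": new_value, "reason": reason})
        self.value = new_value


def audit_record(app_id: str, inputs: dict, decision: str, contributions: dict) -> str:
    """3. Audit trail: reject prohibited attributes, then log inputs, lineage, and output."""
    leaked = PROHIBITED_ATTRIBUTES.intersection(inputs)
    if leaked:
        raise ValueError(f"prohibited attributes in model input: {sorted(leaked)}")
    return json.dumps({
        "application_id": app_id,
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "inputs": {name: tv.value for name, tv in inputs.items()},
        "lineage": {name: {"source": tv.source,
                           "collected_on": tv.collected_on.isoformat(),
                           "changes": tv.changes}
                    for name, tv in inputs.items()},
        "decision": decision,
        "contributions": contributions,
    })


def apply_retention(record: dict, collected_on: date, today: date) -> dict:
    """4. Retention: keep the record for five years, then strip direct identifiers."""
    try:
        expires = collected_on.replace(year=collected_on.year + RETENTION_YEARS)
    except ValueError:  # collected on Feb 29 and the target year is not a leap year
        expires = date(collected_on.year + RETENTION_YEARS, 3, 1)
    if today < expires:
        return record
    return {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}


if __name__ == "__main__":
    income = TrackedValue(4_500, "loan_application_form", date(2024, 6, 1))
    income.update(45_000, "corrected data entry error", date(2024, 6, 2))
    score = TrackedValue(640, "credit_bureau_daily", date(2024, 6, 1))
    if authorize("compliance_officer", "export"):
        print(audit_record("APP-1", {"annual_income": income, "credit_score": score},
                           "declined", {"credit_score": 0.18}))
```

Two caveats apply. Confirming that race or gender never reaches the model does not rule out proxy variables that correlate with them, and removing direct identifiers is only a first step toward anonymization, since the remaining fields can still allow re-identification.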

Implementing such practices allows the bank to:

- Comply with regulatory requirements.

- Strengthen stakeholder trust.

- Ensure that credit decisions remain explainable, understandable, and legally compliant.

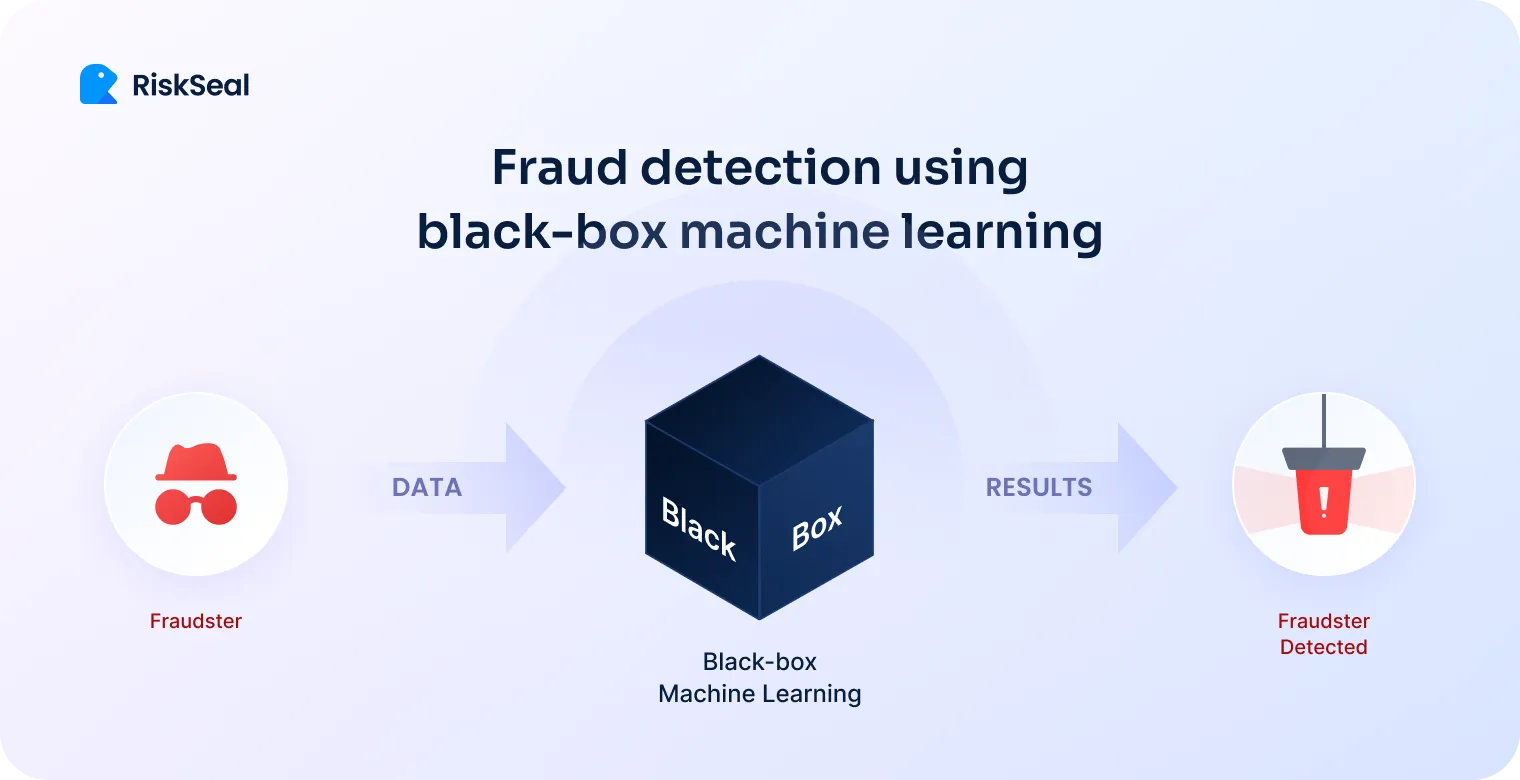

White-box AI vs. black-box AI in credit scoring

In this section, we suggest taking a closer look at the theory behind AI-based credit scoring.

AI-based credit scoring refers to the use of artificial intelligence algorithms and machine learning methods to assess a borrower’s creditworthiness.

So, what is machine learning? This AI discipline focuses on creating algorithms capable of learning from various data sets.

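These algorithms and models can be either black-box or white-box in nature.

Here is how they differ: in a white-box model, the logic that links inputs to outputs can be inspected directly, so it is clear how each factor affected a decision. In a black-box model, that logic is hidden inside a complex structure, and the reasons behind a specific decision cannot be read from the model itself.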

White-box machine learning enables credit institutions to demonstrate fairness and accountability in decision-making.

Its implementation is also key to ensuring regulatory compliance, increasing consumer trust, and reducing reputational and legal risks.
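Logistic regression is a common example of a white-box model: the predicted log-odds of default is a weighted sum of the inputs, so each feature’s contribution to a decision can be read directly from the model. Below is a minimal Python sketch; the features and coefficients are hypothetical and were not fitted to any data.

```python
import math

# Hypothetical coefficients for illustration only; a real model would be fitted to data.
INTERCEPT = -2.0
COEFFICIENTS = {
    "points_below_700": 0.02,    # per credit-score point below 700
    "dti_percent": 0.05,         # per percentage point of debt-to-income
    "missed_payments_6m": 0.60,  # per missed payment in the past six months
}


def featurize(credit_score: int, dti: float, missed_payments: int) -> dict:
    """Turn raw applicant data into the model's input features."""
    return {
        "points_below_700": max(0, 700 - credit_score),
        "dti_percent": dti * 100,
        "missed_payments_6m": missed_payments,
    }


def predict_with_explanation(features: dict) -> tuple[float, dict]:
    """Logistic regression: log-odds of default = intercept + sum(coefficient * feature).
    Each term of that sum is the feature's exact contribution to the log-odds."""
    contributions = {name: COEFFICIENTS[name] * value for name, value in features.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    probability_of_default = 1 / (1 + math.exp(-log_odds))
    return probability_of_default, contributions


if __name__ == "__main__":
    p_default, contributions = predict_with_explanation(featurize(640, 0.45, 2))
    print(f"probability of default: {p_default:.1%}")
    for name, c in sorted(contributions.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name:>20}: {c:+.2f} log-odds")
```

A black-box model such as a large neural network or gradient-boosted ensemble offers no exact decomposition like this; explanations for it have to be approximated after the fact with post-hoc attribution methods such as SHAP.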

Explainability in AI = fair lending

The benefits of white-box AI for risk management include:

#1. Compliance with legal requirements

AI credit scoring based on white-box ML helps lenders comply with key laws and regulations on lending and data privacy, including:

- The Equal Credit Opportunity Act (ECOA)

- Fair Lending laws

- The General Data Protection Regulation (GDPR)

These frameworks require lenders to give consumers the reasons behind credit decisions, particularly denials, and to avoid discriminatory or unfair practices.

Explainable AI helps lenders meet these requirements by making every decision explainable to both lenders and borrowers.

#2. Reducing bias

If AI models are trained on historical or limited datasets, they may inherit the biases present in that data. White-box ML helps solve this problem.

Thanks to transparency and explainability, a lender can identify signs of discrimination across different demographic groups. They can understand how various factors affect the decision and adjust unfair models accordingly.

This promotes equal access to credit.
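One simple way to look for the group-level signs of discrimination described above is to compare approval rates across groups. Below is a minimal Python sketch of an adverse impact ratio check. The 0.8 cutoff is the “four-fifths rule” from US employment-selection guidelines; in lending it is at most a rough screening heuristic, not a legal test, and the group labels and outcomes are hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    totals, approvals = defaultdict(int), defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def adverse_impact_ratios(decisions, reference_group, threshold=0.8):
    """Compare each group's approval rate with the reference group's.
    Returns {group: (ratio, flagged)}, flagging ratios below the threshold."""
    rates = approval_rates(decisions)
    reference_rate = rates[reference_group]
    if reference_rate == 0:
        raise ValueError("reference group has no approvals; ratio is undefined")
    return {
        group: (rate / reference_rate, rate / reference_rate < threshold)
        for group, rate in rates.items()
        if group != reference_group
    }


if __name__ == "__main__":
    # Hypothetical outcomes: group_a approved 80 of 100, group_b approved 50 of 100
    decisions = ([("group_a", True)] * 80 + [("group_a", False)] * 20
                 + [("group_b", True)] * 50 + [("group_b", False)] * 50)
    print(adverse_impact_ratios(decisions, reference_group="group_a"))
```

A flagged ratio is a prompt for investigation rather than proof of discrimination, since approval rates can also differ for legitimate credit reasons. The same check can be run on any segment, such as the salaried and self-employed borrowers from the earlier case study.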

#3. Implementing fair lending practices

Ethical use of AI in lending involves aligning algorithms with human values and legal norms.

White-box AI is the technology that supports ethical practices. It allows stakeholders to understand, challenge, and improve lending decisions.

As a result, we get a more responsible, human-centered lending system.

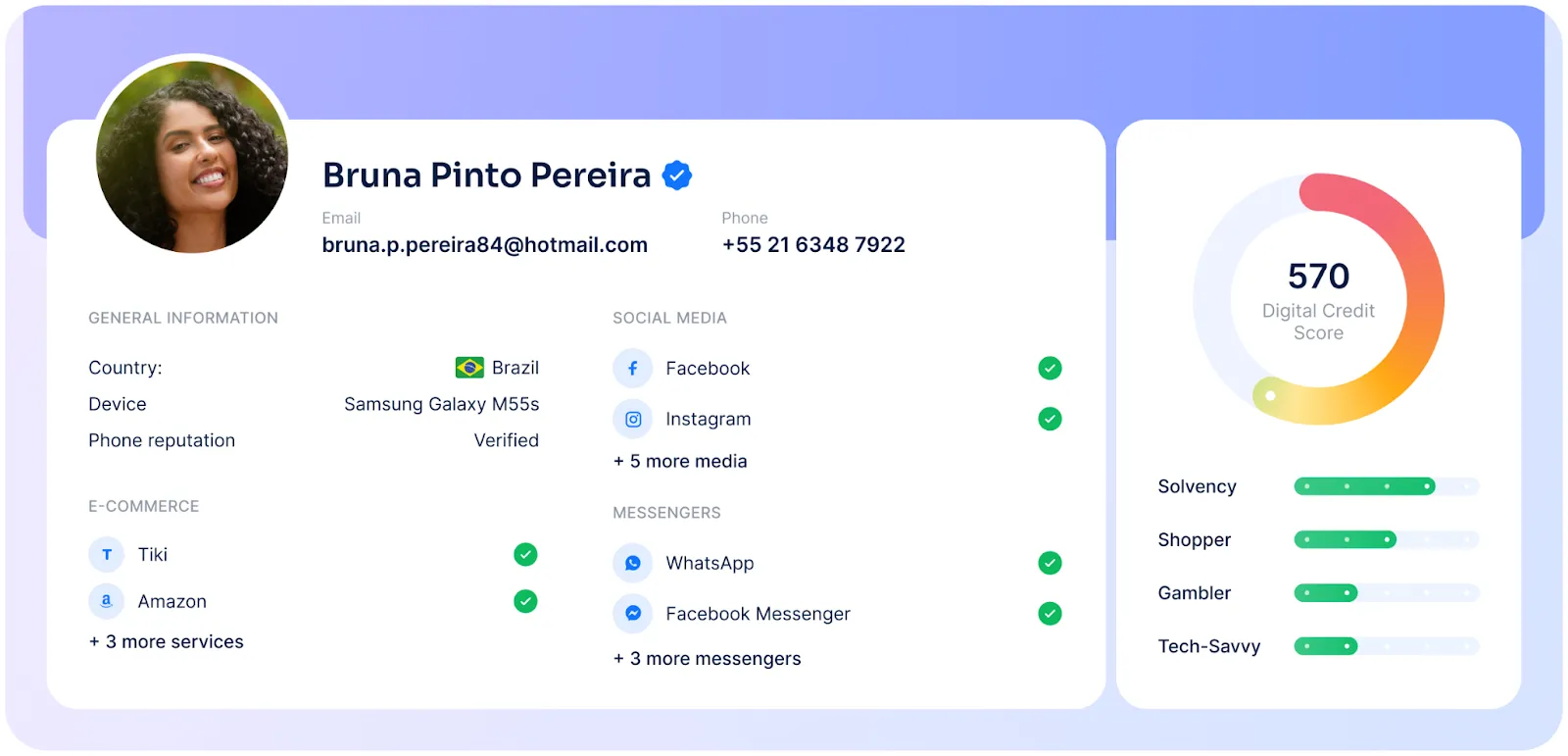

RiskSeal's commitment to fair lending

RiskSeal is committed to ensuring transparency in every credit decision we make. We use explainable AI in our models, which makes our system transparent and accountable.

White-box models at RiskSeal

Our scoring system is built on white-box machine learning models. This means that every credit decision made by our AI is fully explainable.

Clients not only receive the final creditworthiness assessment but also gain access to the exact formula and underlying data points that led to that decision.

This level of transparency fosters trust and ensures that every decision can be clearly understood and justified.

Compliance through explainability

In the financial sector, compliance is critical.

Regulations are increasingly requiring lenders to clearly explain how credit decisions are made, especially when AI is involved.

RiskSeal’s white-box AI meets these standards by design.

It not only delivers credit assessment results but also provides easy-to-understand explanations of how those results were reached.

This transparency reduces regulatory risk and builds trust.

It also reinforces RiskSeal’s position as a leader in ethical and responsible AI.

