How AI Credit Scoring Is Changing the Lending Industry

Discover how AI is changing credit scoring with real-time data, boosting accuracy, reducing risk, and improving financial inclusivity.

The lending industry has changed significantly in recent years. Financial organizations are increasingly automating credit scoring by integrating artificial intelligence (AI) into their systems.

According to Statista, the AI market in the fintech sector was valued at $43.8 billion in 2023.

By 2029, this figure is expected to grow by about $7.1 billion, reaching $50.9 billion.

This growth reflects the wide range of opportunities AI opens up for lenders. Among other things, it helps optimize credit risk assessment, improve financial inclusivity, and combat fraud more effectively.

At RiskSeal, we understand the opportunities that artificial intelligence offers to lenders. Like many software development companies, we’ve placed this technology at the core of our credit scoring platform.

In this article, we will discuss how AI is transforming traditional credit scoring models by utilizing more dynamic data sources, such as digital footprints and behavioral analytics.

What is artificial intelligence credit scoring?

AI credit scoring refers to the use of AI algorithms and machine learning (ML) methods to assess a person's creditworthiness and reliability.

The traditional credit assessment process relies mainly on the borrower’s financial information, whereas AI makes it possible to draw on a wide range of alternative data for credit scoring.

Here are some examples:

AI credit scoring education and work experience

- Transaction history

- Income amount and its relation to expenses

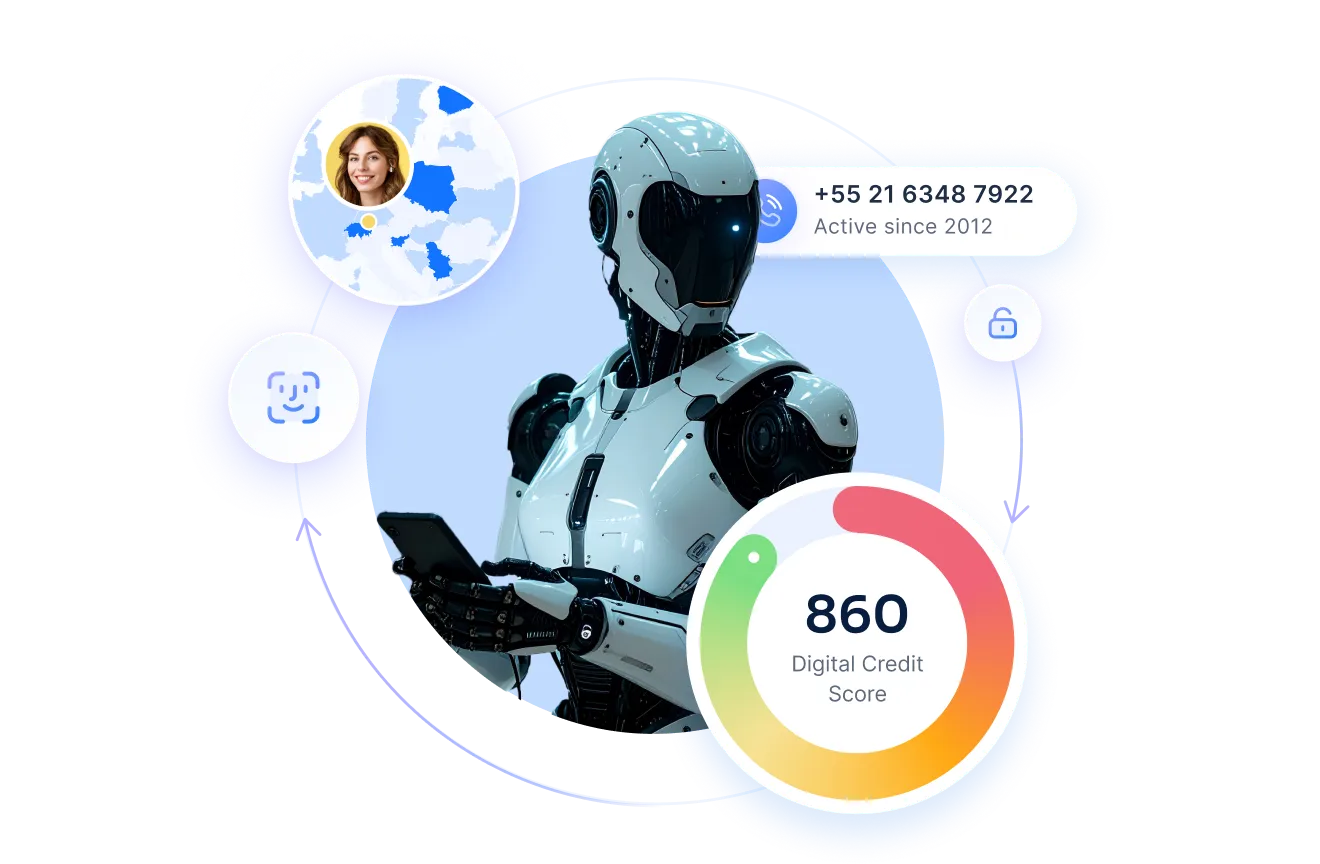

- Applicant’s digital footprints (social media activity, IP address data, phone number, etc.)

AI-driven credit scoring can produce more accurate results than traditional methods. It gives lenders a personalized digital credit score for each applicant in real time.

How does AI scoring operate?

The use of AI in modern credit risk management is a fundamentally new approach to assessing borrowers' creditworthiness.

Artificial intelligence allows for a departure from traditional credit score criteria, which include financial history and consumer ratings.

Instead of relying on these criteria alone, lenders can use machine learning algorithms that analyze information obtained from alternative data providers to support credit decisioning.

The operation of artificial intelligence scoring is based on the use of ML models to process large datasets. This process is carried out in several stages:

- Comprehensive data collection

- Training machine learning models

- Implementing predictive analytics

- Continuous improvement of algorithms

#1. Comprehensive data collection

Access to diverse information about the consumer is the foundation of AI loan approval.

To gain a complete picture of an individual's financial behavior, systems analyze various data sources. This includes online transaction history, social media activity, mobile operator data, and more.

#2. Training machine learning models

AI credit tools are based on machine learning models. These models can detect patterns and relationships by learning from large datasets.

There are several types of training for such models:

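- Supervised learning: the model learns from labeled data, such as past loans tagged as repaid or defaulted.

- Unsupervised learning: the model finds structure in unlabeled data, such as groups of similar applicants, without predefined outcomes.

- Reinforcement learning: the model learns from rewards or penalties it receives while interacting with a defined environment.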

Each of these approaches enables scoring systems to study and analyze thousands of data points. This allows for the identification of non-obvious factors influencing the likelihood of debt repayment by the borrower.
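As a minimal illustration of supervised learning, the sketch below fits a logistic regression by batch gradient descent on a tiny, invented set of past loans labeled as repaid (0) or defaulted (1). The two feature names and all values are hypothetical; production systems would use an established ML library and far larger, validated datasets.

```python
import math


def sigmoid(z: float) -> float:
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_default_probability(x, weights, bias):
    """Probability of default for one feature vector under a logistic model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_logistic_regression(rows, labels, lr=0.1, epochs=2000):
    """Fit weights by batch gradient descent on the average log-loss.

    rows: feature vectors (lists of floats)
    labels: 1 if the loan defaulted, 0 if it was repaid
    """
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            # Gradient of the log-loss w.r.t. the linear score is (prediction - label)
            error = predict_default_probability(x, weights, bias) - y
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        # Step against the gradient averaged over the batch
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return weights, bias


# Hypothetical labeled history: [expense_to_income_ratio, late_payments_last_year]
history = [[0.3, 0], [0.4, 1], [0.5, 0], [0.9, 3], [0.8, 2], [1.1, 4]]
defaulted = [0, 0, 0, 1, 1, 1]

weights, bias = train_logistic_regression(history, defaulted)
print(predict_default_probability([0.2, 0], weights, bias))  # low-risk profile
print(predict_default_probability([1.2, 5], weights, bias))  # high-risk profile
```

The model "discovers" that higher expense ratios and more late payments go with default only because the labels say so; that dependence on labeled outcomes is what distinguishes supervised learning.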

#3. Implementing predictive analytics

After training, the ML credit risk model can predict borrower behavior, such as the likelihood that a new applicant will repay a loan.

At this stage, the AI-based scoring system works as follows:

- A new loan application is received.

- The system performs a credit risk analysis based on previously studied patterns.

- Based on various factors, an AI score is generated, reflecting the individual's creditworthiness. For example, at RiskSeal, it’s a ready-to-use Digital Credit Score.
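The steps above can be sketched as: take a new application, apply coefficients learned earlier to get a probability of default, then scale that probability to a points-based score. The coefficients and feature names are hypothetical, and the scaling (600 points at 50:1 good-to-bad odds, 20 points to double the odds) is a common scorecard convention, not the formula behind RiskSeal's Digital Credit Score.

```python
import math

# Hypothetical coefficients learned during training (illustrative values only)
WEIGHTS = {"expense_to_income_ratio": 2.5, "late_payments_last_year": 1.2}
BIAS = -4.0


def default_probability(application: dict) -> float:
    """Apply the previously learned logistic model to a new application."""
    z = BIAS + sum(WEIGHTS[name] * application[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def probability_to_score(p_default: float, base_score=600.0, base_odds=50.0, pdo=20.0) -> float:
    """Scale a default probability to points: base_score at base_odds (good:bad),
    plus pdo points every time the good:bad odds double."""
    if not 0.0 < p_default < 1.0:
        raise ValueError("probability must be strictly between 0 and 1")
    factor = pdo / math.log(2)
    offset = base_score - factor * math.log(base_odds)
    odds_good = (1.0 - p_default) / p_default
    return offset + factor * math.log(odds_good)


# A new loan application arrives (hypothetical field values)
new_application = {"expense_to_income_ratio": 0.45, "late_payments_last_year": 0}
score = probability_to_score(default_probability(new_application))
print(round(score))
```

Scaling on log-odds keeps the score monotonic in risk: a lower predicted default probability always yields a higher score.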

#4. Continuous improvement of algorithms

AI credit scoring has a significant advantage over traditional creditworthiness assessments: AI-based systems can stay relevant through continuous learning.

For example, these systems gather information on the repayment of previously issued loans and analyze the accuracy of the assessments made.

Based on the data collected, they improve their algorithms. This contributes to objective credit decisioning.
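One way to check how accurate past assessments were is to compare the risk predicted when each loan was issued with the repayment outcome observed later. The sketch below computes the area under the ROC curve (AUC) by direct pairwise comparison and flags the model for retraining when AUC falls below a threshold; the 0.70 cut-off and the feedback data are illustrative assumptions, not an industry standard.

```python
def auc(risk_scores, defaulted):
    """Area under the ROC curve via pairwise comparison.

    risk_scores: predicted default probabilities recorded when loans were issued
    defaulted: observed outcomes, 1 = defaulted, 0 = repaid
    Returns the share of (defaulter, repayer) pairs in which the defaulter
    was scored as riskier; ties count as half.
    """
    bad = [s for s, y in zip(risk_scores, defaulted) if y == 1]
    good = [s for s, y in zip(risk_scores, defaulted) if y == 0]
    if not bad or not good:
        raise ValueError("need at least one defaulted and one repaid loan")
    wins = 0.0
    for b in bad:
        for g in good:
            if b > g:
                wins += 1.0
            elif b == g:
                wins += 0.5
    return wins / (len(bad) * len(good))


def needs_retraining(risk_scores, defaulted, min_auc=0.70):
    """Flag the model when its ranking power on recent loans drops too low."""
    return auc(risk_scores, defaulted) < min_auc


# Hypothetical feedback batch: scores at origination vs. what actually happened
scores = [0.10, 0.35, 0.20, 0.80, 0.60, 0.15]
outcomes = [0, 1, 0, 1, 0, 0]
print(auc(scores, outcomes), needs_retraining(scores, outcomes))
```

The pairwise loop is quadratic and only suits small batches; library implementations compute the same quantity by sorting.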

The challenge of black box AI in credit risk decisioning

At the current stage of AI technology implementation, there is a problem that is particularly pressing for lenders.

Many ML models work as a "black box": humans cannot easily see how they turn input data into a result.


Here’s how credit risk decisioning is conducted using black box AI:

1. Submission of a loan application. A new application is received by the system, containing client data for analysis.

2. Execution of the credit score calculation algorithm. The system studies and analyzes the input data.

3. Providing the results of the AI score check to the user. The lender receives an AI rating for the potential borrower but does not understand how that result was obtained.

Consequently, the implementation of "black box" AI models faces certain challenges:

Compliance with regulatory requirements. Regulatory bodies emphasize the need for transparent and explainable AI models.

As a result, black box AI makes it difficult for lenders to meet this requirement.

Decreased level of trust. A lack of transparency can lead to a decrease in the lender's trust in alternative data providers and the borrower's trust in the financial organization.

For example, a loan denial that the lender cannot explain can undermine stakeholder loyalty.

Using transparent and explainable AI models, also known as "white box" AI, can help address these challenges.
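A minimal sketch of what "white box" can mean in practice: with a linear log-odds model, every coefficient is visible, and each feature's contribution to an applicant's result can be listed as a reason. The features and coefficients below are hypothetical; this is one simple explainable approach, not the only one and not RiskSeal's formula.

```python
import math

# Hypothetical log-odds coefficients: positive values increase default risk
WEIGHTS = {
    "late_payments_last_year": 0.8,
    "expense_to_income_ratio": 1.5,
    "account_age_years": -0.3,
}
BIAS = -2.0


def explain(application: dict):
    """Return the default probability and per-feature contributions, sorted
    from the one that raises risk most to the one that lowers it most."""
    contributions = {name: w * application[name] for name, w in WEIGHTS.items()}
    log_odds = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    reasons = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return probability, reasons


prob, reasons = explain(
    {"late_payments_last_year": 2, "expense_to_income_ratio": 0.5, "account_age_years": 4}
)
for feature, contribution in reasons:
    print(f"{feature}: {contribution:+.2f} log-odds")
```

Because the contributions add up exactly to the model's output (plus the bias), the lender can tell an applicant which factors drove a denial.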

Five common pitfalls in AI-based credit scoring

AI-powered credit scoring delivers clear wins in speed, accuracy, and fraud detection. But implementation comes with real risks that can hurt your results.

Explainability isn't the only challenge.

Understanding all the other risks helps you build systems that work while protecting your business and borrowers.

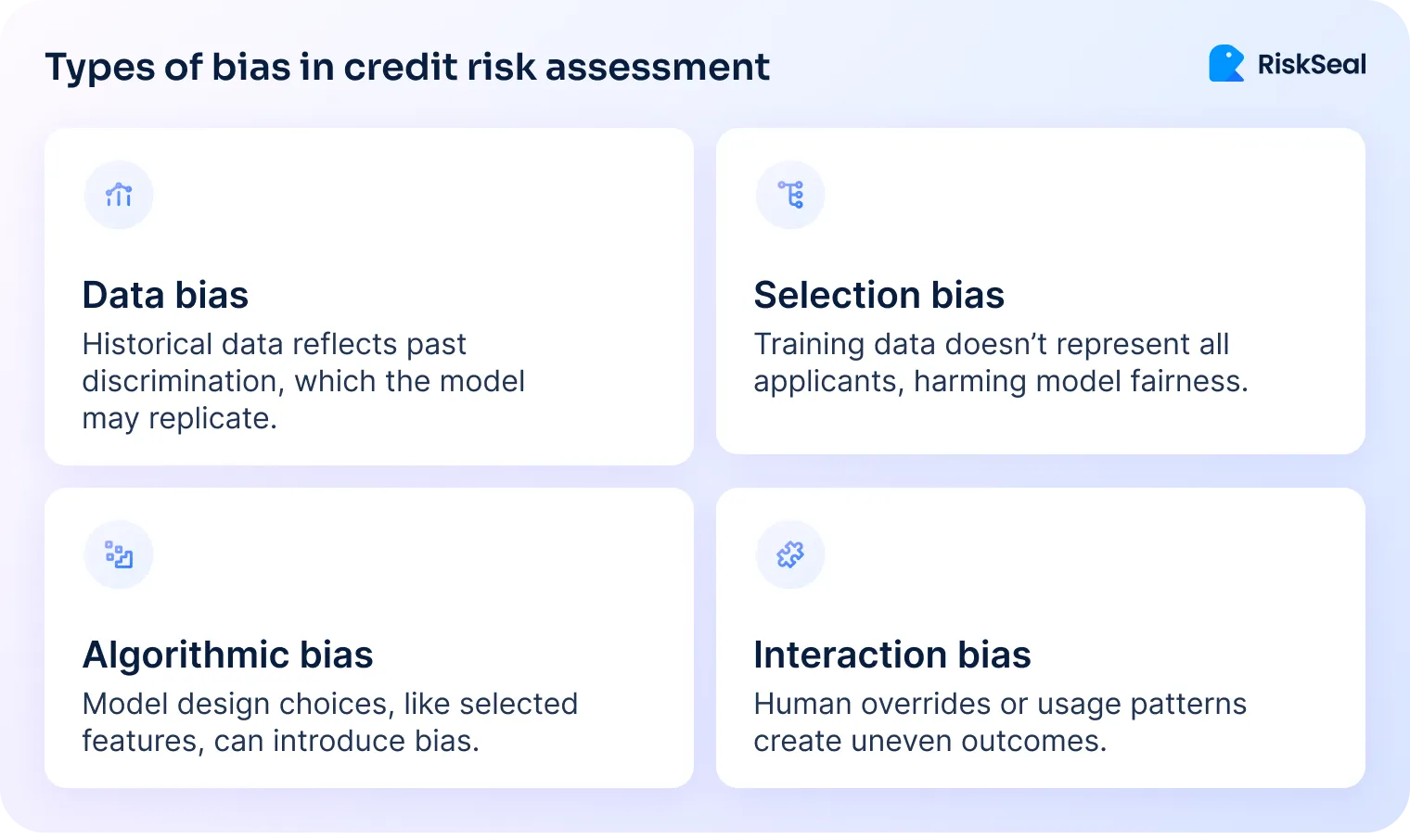

#1. Bias in training data leads to unfair outcomes

AI models learn from historical data. They can inherit and amplify biases hidden in that legacy information.

If your past lending decisions disadvantaged certain groups, your AI model will repeat those patterns.

This creates legal risk under fair lending laws. It also damages trust with the communities you serve.

How to fix it:

- Audit your training data regularly for demographic imbalances.

- Run bias detection tools during model development.

- Use fairness constraints when training models to ensure equal treatment across groups.

- Monitor live lending decisions to catch and fix disparate impact early.
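Monitoring live decisions can start with a simple disparate impact check: compare each group's approval rate with a reference group's rate. The sketch below flags groups whose ratio falls below 0.8, a threshold borrowed from the "four-fifths" rule of thumb used in US employment testing; treat it as a screening heuristic rather than a legal test. The group labels and decisions are made up.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group_label, approved) pairs."""
    totals, approvals = defaultdict(int), defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratios(decisions, reference_group):
    """Each group's approval rate divided by the reference group's rate."""
    rates = approval_rates(decisions)
    reference_rate = rates[reference_group]
    if reference_rate == 0:
        raise ValueError("reference group has no approvals")
    return {group: rate / reference_rate for group, rate in rates.items()}


def flag_groups(decisions, reference_group, threshold=0.8):
    """Groups whose ratio falls below the threshold (0.8 mirrors the four-fifths rule)."""
    ratios = disparate_impact_ratios(decisions, reference_group)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Made-up live decisions: group A approved 80% of the time, group B 50%
decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
print(flag_groups(decisions, reference_group="A"))
```

A flag is a prompt to investigate which features drive the gap, not proof of unlawful bias on its own.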

#2. Data overload reduces accuracy

More data doesn't always mean better decisions. Chasing too many signals drives up costs without improving predictions.

Irrelevant data adds noise to your models. This noise can actually hurt accuracy. You end up making worse decisions while spending more money.

Data hoarding also creates operational drag. Credit risk teams waste time validating weak signals and investigating false positives.

They spend hours supervising model outputs instead of trusting automation. This defeats the purpose of AI – fintechs wanted efficiency but created more manual work.

How to fix it:

- Test which data sources actually improve your predictions.

- Build a cost-benefit framework that weighs each data source's value against its price.

- Start with core datasets and add alternative data from one source at a time.

- Measure the impact at each step.

- Remove low-value features to keep your models lean.
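The "one source at a time" advice can be sketched as greedy forward selection with a cost-benefit stop rule. The code assumes you can measure validation AUC for any combination of sources (`evaluate` is a placeholder for your own backtesting) and that each 0.01 of AUC is worth a fixed amount per application, which is a deliberate simplification. Source names, costs, and lifts are invented.

```python
def select_data_sources(candidates, evaluate, value_per_auc_point):
    """Greedy forward selection with a cost-benefit stop rule.

    candidates: {source_name: cost per application}
    evaluate: function(list_of_sources) -> validation AUC of a model trained
              on the core data plus those sources
    value_per_auc_point: assumed value per application of +0.01 AUC
    """
    selected = []
    current_auc = evaluate([])  # core datasets only
    remaining = dict(candidates)
    while remaining:
        # Net benefit of adding each remaining source on top of the current set
        net = {}
        for name, cost in remaining.items():
            lift = evaluate(selected + [name]) - current_auc
            net[name] = lift / 0.01 * value_per_auc_point - cost
        best = max(net, key=net.get)
        if net[best] <= 0:
            break  # no remaining source pays for itself
        selected.append(best)
        current_auc = evaluate(selected)
        del remaining[best]
    return selected, current_auc


# Toy stand-in for real validation runs: each source adds a fixed AUC lift
TOY_LIFT = {"bank_transactions": 0.06, "telco_data": 0.02, "social_media": 0.005}


def toy_evaluate(sources):
    return 0.70 + sum(TOY_LIFT[s] for s in sources)


costs = {"bank_transactions": 0.50, "telco_data": 0.10, "social_media": 0.30}
print(select_data_sources(costs, toy_evaluate, value_per_auc_point=0.20))
```

In this toy setup the social media source improves AUC slightly but costs more than that improvement is assumed to be worth, so it is left out.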

#3. Privacy risks from behavioral data

Social media activity, browsing patterns, and mobile usage create privacy concerns. Using this data without clear consent violates regulations. It also erodes consumer trust.

Even legally collected data can feel invasive. This perception damages your reputation and relationships with borrowers.

How to fix it:

- Get explicit consent and explain what data you collect.

- Follow data minimization – only collect information that directly affects credit decisions.

- Show borrowers which data influenced their scores.

- Build privacy policies that go beyond minimum requirements.

- Be especially careful when choosing an alternative credit data provider. Make sure you only partner with those who follow ethical data collection practices and interpret data in an auditable way.
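Data minimization and consent can be enforced mechanically before any record reaches a model. In this sketch, `APPROVED_FEATURES` is a hypothetical allowlist of fields the credit policy has documented as decision-relevant; a field survives only if it is on that list and covered by the applicant's consent, and dropped fields are returned so the exclusion can be logged.

```python
# Hypothetical allowlist: fields the credit policy documents as decision-relevant
APPROVED_FEATURES = {"monthly_income", "monthly_expenses", "email_age_days", "phone_tenure_days"}


def minimize(raw_record, consented_fields):
    """Keep only fields that are both approved for credit decisions and covered
    by the applicant's explicit consent. Also return the dropped field names
    so the exclusion can be recorded for audits."""
    allowed = APPROVED_FEATURES & set(consented_fields)
    kept = {k: v for k, v in raw_record.items() if k in allowed}
    dropped = sorted(k for k in raw_record if k not in allowed)
    return kept, dropped


raw = {
    "monthly_income": 3200,
    "email_age_days": 940,
    "phone_tenure_days": 410,
    "pages_visited_last_week": 57,
}
kept, dropped = minimize(
    raw, consented_fields={"monthly_income", "email_age_days", "pages_visited_last_week"}
)
print(kept, dropped)
```

Requiring both conditions means consent alone cannot pull irrelevant behavioral data into scoring, and relevance alone cannot override a missing consent.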

#4. Model drift undermines decisions

Your AI learns from past patterns. But borrower behavior, economic conditions, and markets change constantly.

Models become outdated without regular updates. Their predictions lose accuracy over time. This drift leads to poor lending decisions, higher defaults, and missed opportunities with good borrowers.

According to research from MIT, ML model performance can degrade by 20-30% within just 12-18 months without retraining.

In other words, responsible fintech credit risk teams cannot blindly rely on models that performed well in the past.

How to fix it:

- Monitor model performance metrics continuously.

- Flag when accuracy drops below acceptable levels.

- Retrain models on a set schedule using recent data.

- Run A/B tests before deploying new model versions.

- Set up alerts for sudden changes in approval rates or default predictions.
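Continuous monitoring is often implemented with the population stability index (PSI), which compares the distribution of recent model scores with the distribution seen at development time. The sketch below uses equal-width bins and the widely quoted rule of thumb (below 0.1 stable, 0.1 to 0.25 worth investigating, above 0.25 a significant shift); both the binning and the thresholds are conventions to tune, not fixed standards.

```python
import math


def psi(expected, actual, bins=10):
    """Population stability index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    # Interior bin edges from the baseline range; values outside the range
    # fall into the first or last bin.
    edges = [lo + (hi - lo) * i / bins for i in range(1, bins)]

    def shares(values):
        counts = [0] * bins
        for v in values:
            counts[sum(v > e for e in edges)] += 1
        # Floor empty bins at a tiny share so the logarithm stays finite
        return [max(c / len(values), 1e-6) for c in counts]

    e_shares, a_shares = shares(expected), shares(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_shares, a_shares))


def drift_status(value):
    """Map a PSI value to an alert level using the common rule of thumb."""
    if value < 0.1:
        return "stable"
    if value <= 0.25:
        return "investigate"
    return "significant"


baseline = [i / 100 for i in range(100)]         # scores at development time
recent = [0.5 + i / 200 for i in range(100)]     # hypothetical shifted scores
print(drift_status(psi(baseline, recent)))
```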

#5. Regulatory compliance gaps

Regulators are cracking down on automated lending decisions. They demand explainability, fairness, documentation, and human oversight.

Automation doesn't reduce your compliance burden. It often increases it. Non-compliance brings heavy fines and reputational damage that wipes out any efficiency gains.

How to fix it:

- Build compliance into your AI design from day one.

- Document your model development, data sources, and decision logic thoroughly.

- Keep humans in the loop for high-stakes decisions.

- Stay current with new regulations like the EU AI Act.

- Run regular compliance audits with external experts before regulators find problems.
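Keeping humans in the loop and documenting decision logic can be combined in a single routing step. The sketch below decides automatically only for clear-cut, lower-stakes cases, sends borderline scores and large loans to a human reviewer, and returns a JSON audit record. All thresholds, field names, and the version label are hypothetical.

```python
import json
from datetime import datetime, timezone


def route_decision(application_id, score, loan_amount, model_version,
                   approve_at=650, decline_below=550, manual_amount=10_000):
    """Decide automatically only in clear-cut, lower-stakes cases and return
    an audit record describing how the outcome was reached."""
    if loan_amount >= manual_amount or decline_below <= score < approve_at:
        outcome = "manual_review"  # high stakes or borderline score: a human decides
    elif score >= approve_at:
        outcome = "auto_approve"
    else:
        outcome = "auto_decline"
    audit_record = {
        "application_id": application_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "score": score,
        "loan_amount": loan_amount,
        "thresholds": {"approve_at": approve_at, "decline_below": decline_below,
                       "manual_amount": manual_amount},
        "outcome": outcome,
    }
    return outcome, json.dumps(audit_record)


outcome, record = route_decision("app-001", 610, 4_000, "model-v3")
print(outcome)
print(record)
```

Storing the thresholds and model version with every decision lets auditors reconstruct why an application was treated the way it was, even after the model changes.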

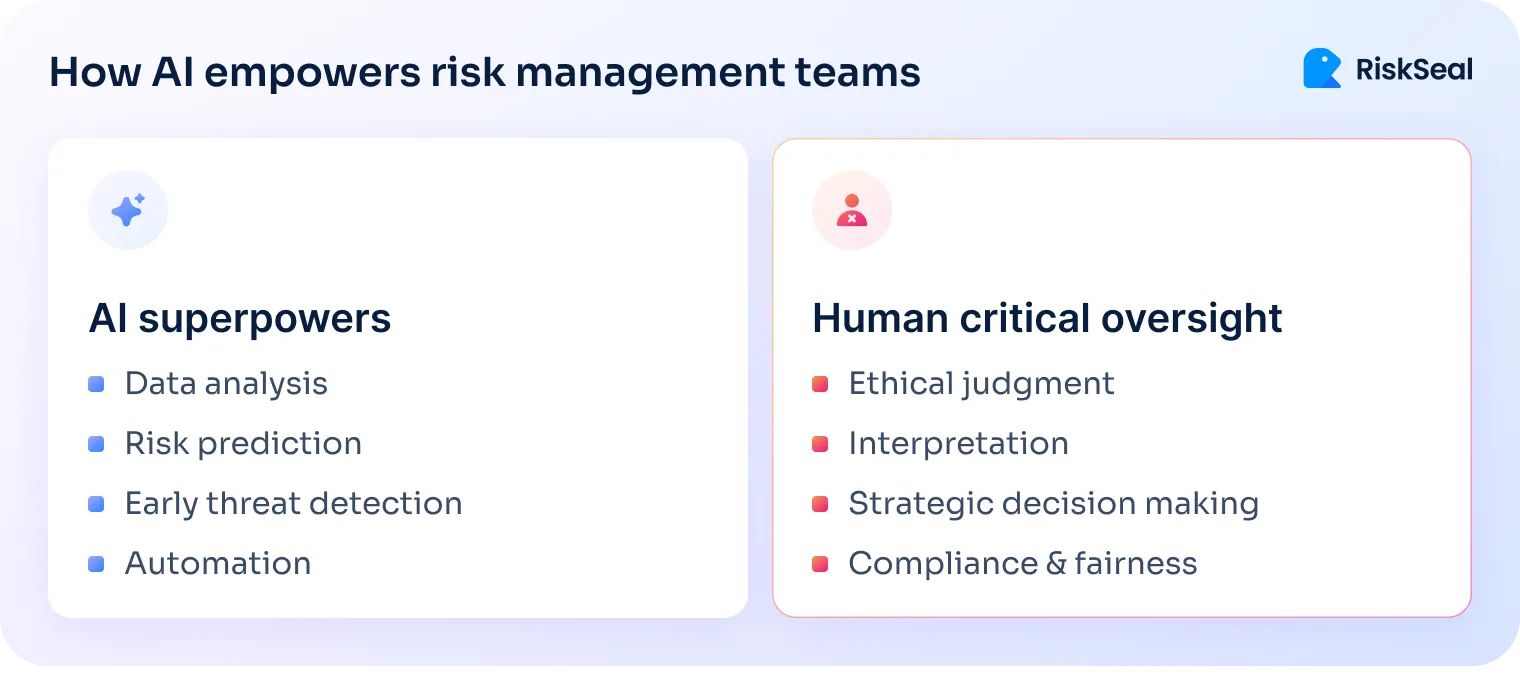

What this means for your credit risk team

Fixing these pitfalls isn't just about better tools or tighter processes. It requires changing how credit risk teams operate day-to-day.

Your action plan:

1. Stop treating your AI models as "set it and forget it" systems.

They need active management, regular challenge, and a willingness to question what's working.

The teams that succeed here build skepticism into their workflow. They expect drift, anticipate bias, and plan for regulatory shifts before they happen.

2. Move away from the "more data always wins" mentality.

Your job isn't to hoover up every available signal. It's to find the specific data that actually improves decisions, then cut everything else.

This means saying no to vendors, challenging feature bloat, and defending lean models even when stakeholders push for complexity.

3. Shift from reactive compliance to embedded oversight.

Don't wait for audits or regulatory letters to review your systems.

Build regular check-ins where someone outside the model development team stress-tests your logic, questions your assumptions, and looks for gaps you've normalized.

4. Make transparency non-negotiable with data providers and internal stakeholders.

If you can't explain where data comes from or how it influences decisions, don't use it. Period. This protects you legally and operationally.

The competitive edge in AI-based credit scoring doesn't come from having the most sophisticated models. It comes from running adaptable risk operations that catch problems early and fix them fast.

RiskSeal’s commitment to ethical AI practices and mitigating biases

RiskSeal is a credit scoring system that uses artificial intelligence across several aspects of credit risk assessment, including digital footprint analysis.

With AI, RiskSeal achieves the following results:

- Increased reliability of credit scoring and credit rating.

- Faster credit risk decisioning.

- Effective detection of fraud indicators at the loan application review stage.

- Accurate identification of anomalies in applicant behavior.

The main advantage of RiskSeal is that it uses transparent and explainable AI models. The solution not only provides clients with AI score results but also shares the formula that explains them.

Use cases of AI in credit scoring

Due to the aforementioned challenges of implementing AI in credit risk assessment, financial organizations cannot fully abandon traditional credit scoring.

However, artificial intelligence is actively applied in various aspects of credit risk decisioning.

Anomaly detection

With machine learning algorithms, AI-based scoring systems can recognize atypical consumer behavior.

When such behavior is detected, the system can automatically flag the application as a high fraud risk.

In RiskSeal, anomaly detection operates by analyzing borrower data from the loan application. The system evaluates the applicant's digital footprint, identifying any discrepancies that may signal potential fraud.
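A simple statistical way to recognize atypical behavior is a robust z-score: measure how far a value sits from the median in units of the median absolute deviation (MAD). The sketch below uses the commonly cited 0.6745 scaling constant and 3.5 cut-off; the feature (applications submitted from one device in 24 hours) and its values are hypothetical, and this is a generic technique, not RiskSeal's detector.

```python
import statistics


def modified_z_scores(values):
    """Robust z-scores based on the median and the median absolute deviation,
    so a single extreme value cannot hide itself by inflating the spread."""
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        # At least half the values equal the median, so the scale is undefined;
        # report no signal rather than divide by zero
        return [0.0] * len(values)
    return [0.6745 * (v - median) / mad for v in values]


def flag_anomalies(values, threshold=3.5):
    """Indices of values whose modified z-score exceeds the threshold."""
    return [i for i, z in enumerate(modified_z_scores(values)) if abs(z) > threshold]


# Hypothetical feature: applications submitted from the same device in the last 24 hours
device_applications = [1, 2, 1, 3, 2, 1, 2, 25]
print(flag_anomalies(device_applications))
```

Using the median and MAD instead of the mean and standard deviation keeps one fraudulent outlier from distorting the baseline it is measured against.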

Name matching

The name matching technology allows for the comparison of the potential borrower’s name provided in the loan application with names in other sources, such as social media profiles.

Any discrepancies may suggest an attempt to falsify data.

RiskSeal's name-matching technology offers a distinct advantage by accounting for linguistic and cultural variations in name spellings. This enables more accurate identification across diverse populations.

For instance, the system can recognize variations like Виктория (Russian), Victoria (English), and Wiktoria (Polish) as the same name.

Additionally, it can identify names written in Arabic script or in logographic scripts such as Chinese characters, improving accuracy across different languages and alphabets.
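To illustrate the general idea only (RiskSeal's actual method is not described here), the sketch below transliterates Cyrillic to Latin with a simplified table, folds two spelling variants, and compares the results with Python's standard `difflib.SequenceMatcher`. The transliteration table, folding rules, and 0.85 similarity threshold are assumptions, and the sketch does not handle Arabic or Chinese script.

```python
from difflib import SequenceMatcher

# Simplified Russian-to-Latin transliteration table (an assumption; real systems
# support many scripts and several romanization standards)
CYRILLIC_TO_LATIN = {
    "а": "a", "б": "b", "в": "v", "г": "g", "д": "d", "е": "e", "ё": "e",
    "ж": "zh", "з": "z", "и": "i", "й": "y", "к": "k", "л": "l", "м": "m",
    "н": "n", "о": "o", "п": "p", "р": "r", "с": "s", "т": "t", "у": "u",
    "ф": "f", "х": "kh", "ц": "ts", "ч": "ch", "ш": "sh", "щ": "shch",
    "ъ": "", "ы": "y", "ь": "", "э": "e", "ю": "yu", "я": "ya",
}


def normalize_name(name: str) -> str:
    """Lowercase, transliterate Cyrillic, and fold a few spelling variants."""
    latin = "".join(CYRILLIC_TO_LATIN.get(ch, ch) for ch in name.strip().lower())
    # Crude, hypothetical folding rules: Polish "w" ~ "v", English "c" ~ "k"
    return latin.replace("w", "v").replace("c", "k")


def names_match(a: str, b: str, threshold: float = 0.85) -> bool:
    """Treat two names as the same if their normalized forms are similar enough."""
    return SequenceMatcher(None, normalize_name(a), normalize_name(b)).ratio() >= threshold


print(names_match("Виктория", "Victoria"), names_match("Victoria", "Wiktoria"))
```

Normalizing first and comparing fuzzily second lets small leftover differences (here, Russian "ya" versus English "a") pass without treating unrelated names as matches.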

Face recognition

This AI-based technology is widely used for identity verification. It enables the comparison of multiple user photos to verify whether they depict the same individual.

If the system cannot confirm that the images show the same person, the applicant is assigned a high risk level.

For example, RiskSeal's face recognition technology makes identity verification of potential borrowers more efficient. It helps reduce the costs of Know Your Customer (KYC) checks by filtering out unreliable borrowers at the application stage.
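Face matching systems commonly convert each photo into a numeric embedding with a neural network and compare embeddings by similarity. The sketch below assumes such embeddings already exist (the embedding model is not shown and the vectors are toy values) and assigns a high risk level when any pair of photos falls below a hypothetical cosine-similarity threshold.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / (norm_a * norm_b)


def face_risk_level(embeddings, threshold=0.8):
    """'low' if every pair of photos looks like the same person, else 'high'.

    embeddings: one vector per photo, produced by some face-embedding model
    threshold: hypothetical cut-off; real values depend on the model used
    """
    if len(embeddings) < 2:
        raise ValueError("need at least two photos to compare")
    same_person = all(
        cosine_similarity(a, b) >= threshold for a, b in combinations(embeddings, 2)
    )
    return "low" if same_person else "high"


# Toy 3-dimensional vectors standing in for real, much higher-dimensional embeddings
selfie = [0.9, 0.1, 0.2]
id_photo = [0.85, 0.15, 0.25]
profile_picture = [0.1, 0.9, 0.3]
print(face_risk_level([selfie, id_photo]), face_risk_level([selfie, profile_picture]))
```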

In summary, the RiskSeal Digital Credit Scoring system uses AI to assess potential borrowers, give lenders access to a range of alternative data, and analyze consumer digital footprints in depth.

At first glance, interpreting this data can be quite challenging. However, modern AI models allow for the extraction of valuable information for scoring models.
