6 Common Myths About AI in Credit Scoring Debunked

Discover and debunk six common misconceptions about AI in credit scoring and see how RiskSeal helps modern lenders leverage AI safely and effectively.

The AI in Finance market was estimated at $38.36 billion in 2024. It is expected to reach $190.33 billion by 2030.

In credit scoring, AI models have already shown a 13% boost in accuracy compared to traditional methods.

Still, many lenders hold back, often due to misconceptions. This article breaks down six common myths about AI-powered credit scoring and how RiskSeal addresses them in practice.

Myth 1. AI is too immature for regulatory-grade decision-making

Reality: AI has decades of institutional use; what matters is governance.

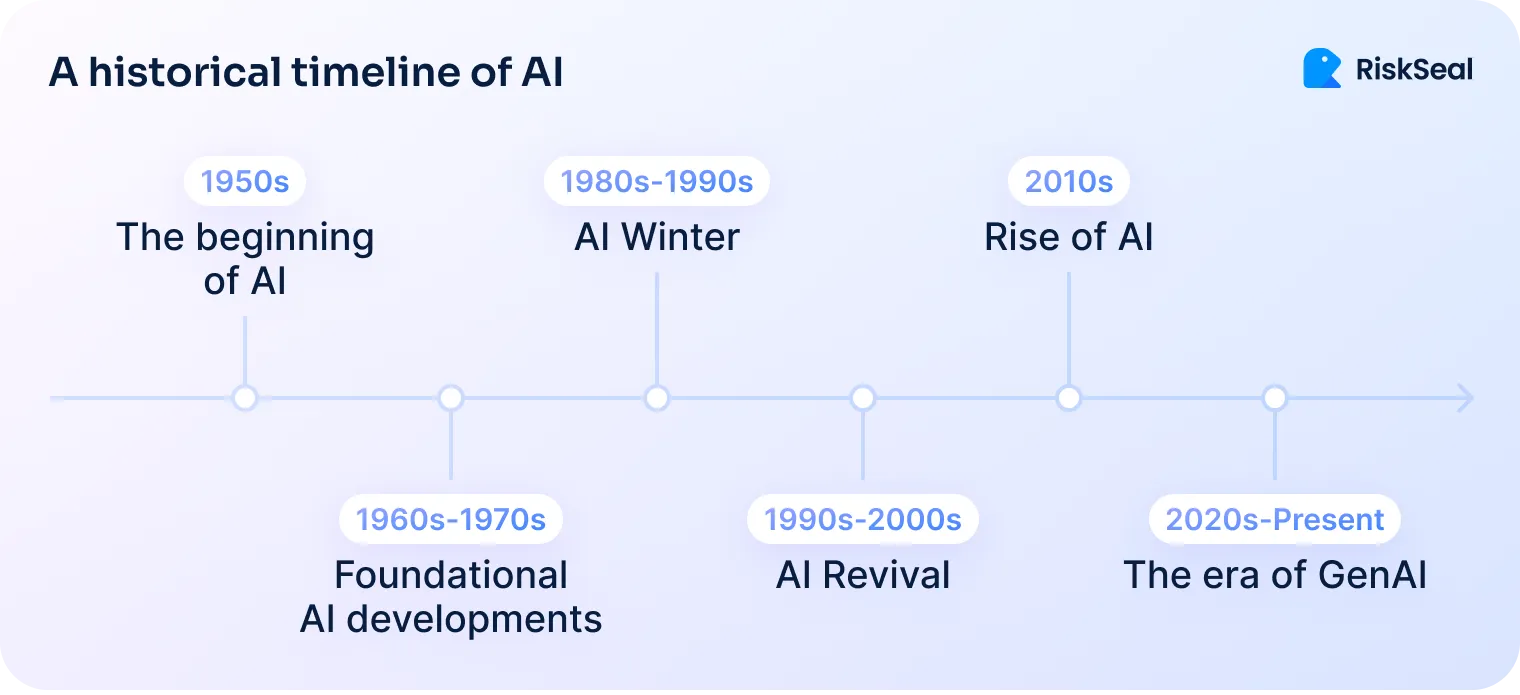

AI might seem like a new addition to credit operations, but the core ideas have been around for decades. Alan Turing proposed machine-based problem solving in the 1940s.

By the 1980s, rule-based expert systems were already in use at financial institutions, mostly for loan evaluations and credit analysis.

In the early 2000s, ML models began assisting in fraud detection, alert systems, and transaction monitoring.

Today, financial institutions use AI to:

- Flag suspicious or unusual transactions

- Screen loan and credit applications

- Detect real-time payment fraud

- Assess risk during client onboarding

- Monitor for identity theft and synthetic fraud

- Automate KYC and AML checks

The technology itself isn’t new. What has changed is that it’s now more powerful and easier to use.

That’s why the real question isn’t whether the tech is “new enough,” but whether it’s used the right way.

To meet regulatory standards, an AI system needs to be well-documented, carefully monitored, and fully auditable. With the right controls in place, AI can be safer and more consistent than manual decision-making.

7 questions credit risk teams should ask their AI partner

Before partnering with AI credit scoring companies, it’s essential to go beyond the demo and dig into how their systems are governed.

These questions can help you evaluate whether a vendor’s AI is truly mature, compliant, and fit for regulated lending environments:

- How is the model validated and monitored over time?

- What documentation is available for regulators and internal audit teams?

- Can decisions be explained in a way that non-technical users understand?

- What governance frameworks are in place for model updates and retraining?

- How is data drift or model degradation detected and managed?

- Is there a clear audit trail for every decision the AI supports?

- Who is accountable for the model's outputs, and how is that defined?

A mature AI credit scoring solution isn’t just one that “works.” It’s one you can trust, explain, and defend.
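To make the drift question above more concrete, here is a minimal sketch of one common monitoring check, the Population Stability Index (PSI). It compares how scores were distributed at validation time with how they are distributed today. The function name, the bin edges, and the 0.25 review threshold are illustrative assumptions (0.25 is a widely used rule of thumb, not a regulatory requirement), and nothing here describes any particular vendor's monitoring.

```python
import math

def population_stability_index(baseline, recent, bin_edges, floor=1e-6):
    """Compare how two samples of a score spread across the same bins."""
    if not baseline or not recent:
        raise ValueError("both samples must be non-empty")

    def bin_shares(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            # Bin index = number of edges at or below the value.
            counts[sum(1 for edge in bin_edges if v >= edge)] += 1
        # Floor each share so empty bins do not cause log(0) or division by zero.
        return [max(c / len(values), floor) for c in counts]

    b, r = bin_shares(baseline), bin_shares(recent)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))

# Hypothetical score samples: validation-time scores vs. last month's scores.
validation_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
recent_scores = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 0.9]
edges = [0.25, 0.5, 0.75]  # assumed bin boundaries

psi = population_stability_index(validation_scores, recent_scores, edges)
needs_review = psi > 0.25  # assumed rule-of-thumb threshold
```

A vendor with mature governance should be able to tell you which checks of this kind they run, how often, and what happens when one trips.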

Myth 2. All AI is the same; it’s just ChatGPT in disguise

Reality: Generative AI ≠ Predictive AI ≠ Prescriptive AI.

Not all AI works the same way or should be used for the same purpose. Grouping it all under one label leads to confusion.

Predictive AI is based on supervised machine learning. It looks at patterns in historical data to make forecasts. This is the kind of AI used when a lender asks, “What’s the likelihood this borrower will repay?”

Generative AI like ChatGPT is different. It’s useful for support tasks, but it isn’t built to make credit decisions and shouldn’t be used for that.

Prescriptive AI goes one step further. It suggests actions based on predictions. It’s useful for personalization, but only when used alongside strong controls.

These models serve different functions, and mixing them up can create real risk.

At RiskSeal, AI Digital Credit Scoring is strictly predictive. It’s based on labeled outcomes and tested for accuracy. No self-learning, no generative shortcuts, and no hidden logic. Every prediction is designed to meet regulatory standards.

Myth 3. AI for credit scoring introduces more compliance risk than it solves

Reality: AI can be your compliance co-pilot.

Many lenders worry that AI makes credit decisions harder to explain and harder to defend. But the opposite can be true if the system is designed thoughtfully.

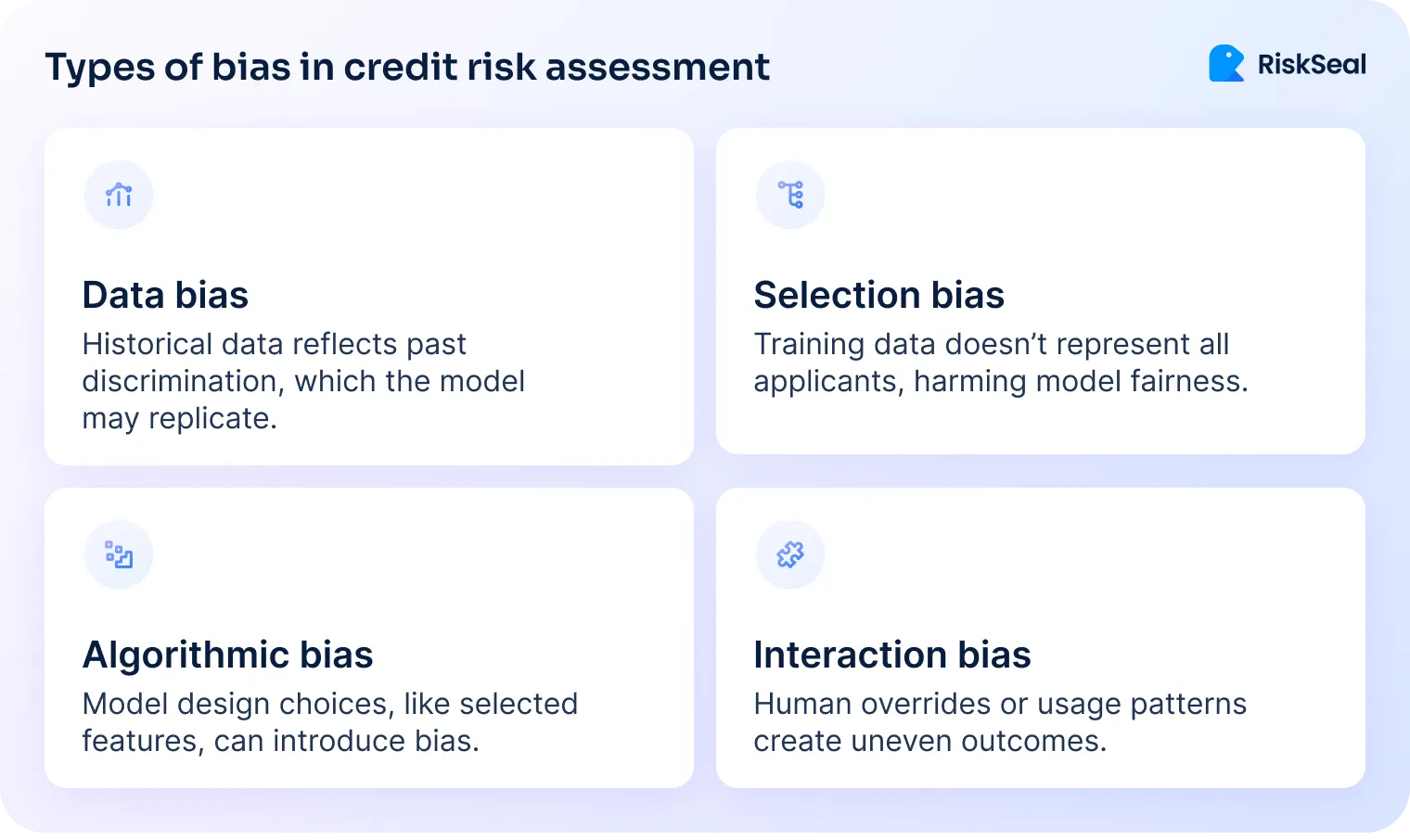

AI can enhance regulatory alignment by improving consistency, reducing bias, and enabling real-time oversight.

Traditional credit models often rely on rigid rules and limited data. This can lock out creditworthy borrowers and create blind spots in risk assessment.

In contrast, AI in credit risk can analyze a broader range of variables and uncover hidden patterns, including unfair ones. That makes it a powerful tool for proactive detection of AI bias in credit scoring.

Modern AI systems enable fairness audits before a model ever goes live. Techniques like disparate impact analysis help ensure that decisions don’t unfairly disadvantage certain groups.

When disparities are found, models can be adjusted early, not after regulatory intervention.
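As a rough illustration of disparate impact analysis, the sketch below compares approval rates across groups and flags any group whose rate falls below a set fraction of a reference group's rate. The function names, the sample data, and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb) are assumptions for illustration. A real fairness audit would also look at sample sizes, statistical significance, and legal guidance.

```python
from collections import defaultdict

def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}

def adverse_impact_ratios(decisions, reference_group):
    """Each group's approval rate divided by the reference group's rate."""
    rates = approval_rates(decisions)
    ref = rates[reference_group]  # assumes the reference group has approvals
    return {g: rate / ref for g, rate in rates.items()}

def flag_disparities(decisions, reference_group, threshold=0.8):
    """Groups whose ratio falls below the (assumed) threshold."""
    ratios = adverse_impact_ratios(decisions, reference_group)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)

# Hypothetical pre-launch test set: group A approved 8 of 10, group B 5 of 10.
sample = ([("A", True)] * 8 + [("A", False)] * 2
          + [("B", True)] * 5 + [("B", False)] * 5)
flagged = flag_disparities(sample, reference_group="A")
```

Running a check like this on a validation set before launch is what lets a team adjust a model early instead of reacting to a finding later.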

Transparency doesn’t mean compromising on performance. It means ensuring traceability and accountability in every decision.

Verity Credit Union shared its results after adopting Zest AI’s automated underwriting system. The model used broader data than traditional scores, helping assess borrowers more fairly.

This led to a significant increase in loan approvals across several underserved groups.

Fairer inputs lead to broader access without lowering standards.

Myth 4. Credit scoring AI decisions are unexplainable. What if I can’t defend them?

Reality: With “locked” AI models, explainability is built in.

Not all AI works like a black box. Some models are designed to be clear and consistent from the start.

“Unlocked” models learn and change as they go. They’re flexible, but unpredictable. That makes them risky in regulated environments.

“Locked” models, on the other hand, are frozen at the point of deployment. They don’t shift or retrain themselves in production.

This means you can document, audit, and explain every output they generate.

That’s exactly what regulators expect.

Agencies like the CFPB, FDIC, and OCC aren’t banning AI. They’re asking for transparency.

If you use AI in risk assessment, you need to show how and why it works the way it does.

RiskSeal’s models are locked and fully documented. For every credit decision, there’s a traceable explanation of what data was used and how the score was calculated.

Systems like RiskSeal create an audit trail for each decision, complete with risk drivers and model logic summaries. Our clients receive outputs designed to be readable by both credit professionals and compliance teams.
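To show what a per-decision audit trail for a locked model might look like, here is a minimal sketch. It fingerprints the frozen model parameters with a hash and stores that fingerprint alongside the inputs, score, and risk drivers. The field names, the parameter format, and the sample values are all hypothetical. This is not a description of RiskSeal's internal record format.

```python
import hashlib
import json
from datetime import datetime, timezone

def fingerprint(model_params):
    """Stable SHA-256 hash of the frozen model parameters."""
    canonical = json.dumps(model_params, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()

def build_audit_record(application_id, inputs, score, risk_drivers, model_params):
    """Bundle everything needed to explain one decision later."""
    return {
        "application_id": application_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_fingerprint": fingerprint(model_params),
        "inputs": inputs,
        "score": score,
        "risk_drivers": risk_drivers,
    }

def verify_locked(record, model_params):
    """True if the record was produced by these exact parameters."""
    return record["model_fingerprint"] == fingerprint(model_params)

# Hypothetical frozen parameters and one scored application.
params = {"version": "2024-01", "weights": {"missed_payments": 0.8}}
record = build_audit_record(
    application_id="APP-001",
    inputs={"missed_payments": 1},
    score=0.31,
    risk_drivers=["missed_payments"],
    model_params=params,
)
```

Because a locked model never changes in production, the same fingerprint should appear on every record until a new version is formally released, which gives auditors a simple consistency check.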

Explainability isn’t just a bonus. It's a core requirement in real-world lending. Built-in transparency helps ensure AI decisions are aligned with regulatory expectations.

Myth 5. Only big banks can afford AI credit scoring

Reality: Modular AI tools are now accessible to mid-size and community lenders.

There was a time when only institutions with large data teams and deep pockets could use AI.

That’s no longer the case.

APIs and cloud-based deployment have changed the game. Lenders no longer need to build systems from scratch.

Instead, they can plug into modular tools that do one thing well, like scoring, fraud detection, or data enrichment.

Case study. A small lender scaled faster with AI-driven credit scoring

Due to a confidentiality agreement, we’re not able to name the lender. But the results and process below reflect real-world implementation.

What happened?

A community lender handling fewer than 10,000 loan applications per year wanted to modernize its credit assessment process.

Their team was small, and decisions were taking too long.

What went wrong?

Without automation, the team spent too much time manually reviewing applications, especially those that never made it past KYC.

Approval rates were lower than expected, and the team couldn't scale without burning out.

How the challenge was tackled

The lender integrated RiskSeal’s AI-powered scoring tool into their existing workflow.

No data science team, no custom infrastructure.

The tool helped pre-screen applications using alternative data before reaching the KYC stage.

The result

Up to 70% of high-risk applications were filtered out early, reducing manual workload and speeding up approvals.

The lender operated more efficiently with the same team size and stayed competitive with larger players.

Small lenders don’t need massive infrastructure to benefit from AI. They just need a clear goal and the right partner.

Signs you’re ready for AI-based credit scoring (even with a small portfolio)

Not sure if your team is ready?

If you find yourself saying “yes” to three or more of the points below, it's a strong signal that AI could help you work smarter, not just faster.

- You make repetitive credit decisions that could be automated

- You want to approve more good borrowers without increasing risk

- You already collect customer data, even if it’s stored in Excel

- You don’t have a full analytics team, but you're open to simple tools

- You want to reduce application processing time and free up staff

AI isn't just for big banks anymore. It’s a practical, proven tool for lenders of any size who are ready to do more with what they already have.

Myth 6. AI-powered credit scoring for banks is replacing risk professionals

Reality: AI is enhancing human credit judgment, not replacing it.

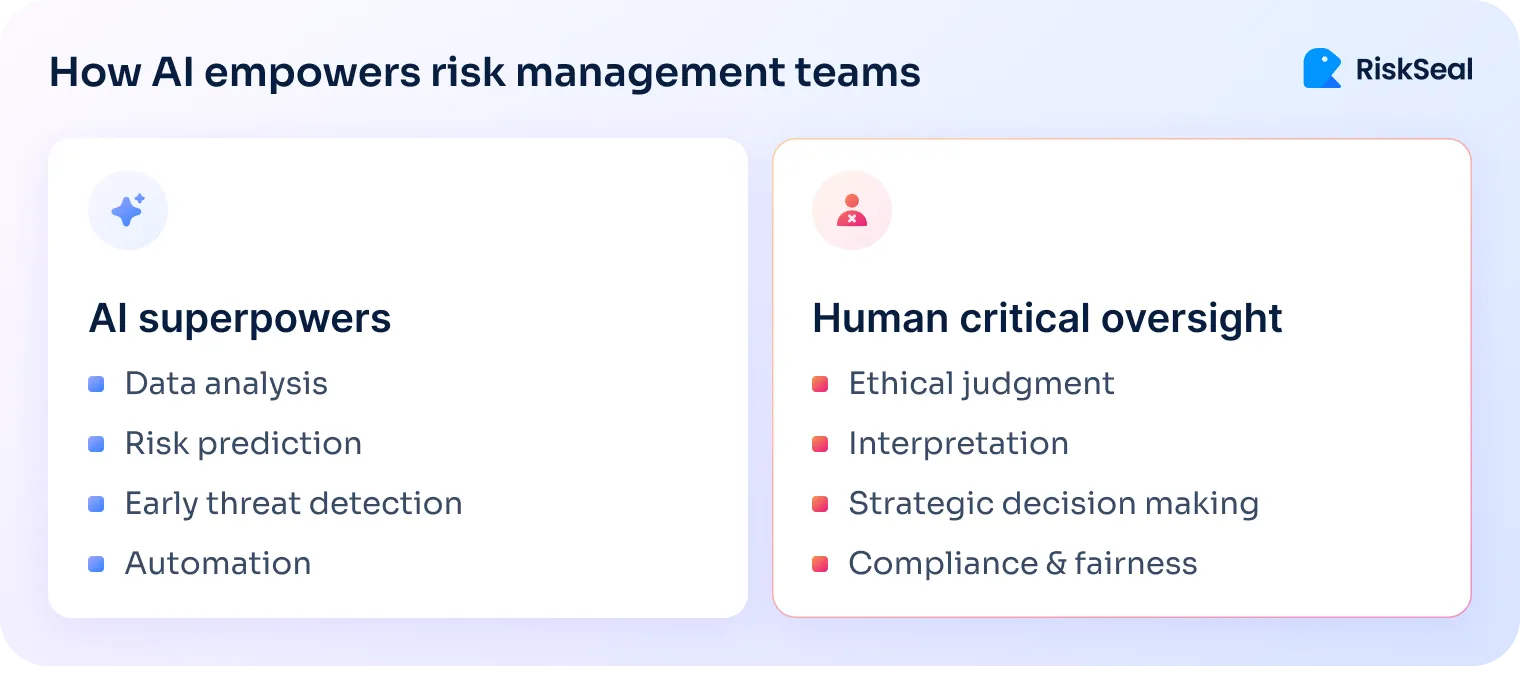

AI doesn’t replace expertise; it supports it.

Most risk teams spend too much time on repetitive decisions. That’s where automation helps most.

AI can screen low-risk applications, flag clear declines, and sort out the middle ground. This frees analysts to focus on complex, high-stakes cases – the ones that need human judgment.
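The three-way split described above can be sketched as a simple routing function. The cutoffs and queue names here are made up for illustration. In practice, each institution would set them from its own risk appetite and validation data.

```python
def triage(default_probability, fast_track_below=0.05, flag_decline_above=0.40):
    """Route an application by its predicted probability of default."""
    if not 0.0 <= default_probability <= 1.0:
        raise ValueError("default_probability must be between 0 and 1")
    if default_probability < fast_track_below:
        return "fast_track"        # low risk: minimal manual effort
    if default_probability > flag_decline_above:
        return "flag_decline"      # clear decline, flagged for confirmation
    return "analyst_review"        # middle ground: needs human judgment

# Hypothetical scores for three applications.
queues = [triage(p) for p in (0.02, 0.20, 0.60)]
```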

Generative AI can also lighten the load. It can draft borrower summaries, highlight key risk factors, and even flag policy exceptions.

That means less time spent digging through data, and more time making real decisions.

But the final call still belongs to people. AI gives structure and speed, but risk teams guide outcomes.

Pro tip: Pair AI-based credit scoring with policy overlays to stay in control

Even the most accurate AI model needs boundaries.

Policy overlays serve as safeguards: business rules applied after a score is generated.

They help ensure lending decisions align with your institution’s risk appetite and compliance requirements.

Common use cases for policy overlays include:

- Flagging applicants below a certain income for manual review

- Auto-rejecting applicants from restricted regions

- Limiting approvals to specific loan types, regardless of score

- Enforcing loan caps or exposure limits

- Triggering additional checks for politically exposed persons (PEPs)

- Routing edge cases or exceptions to senior analysts

By adding these controls on top of AI outputs, you get the best of both worlds.

Automation delivers speed and consistency, while human oversight ensures fairness and accountability.
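Here is a minimal sketch of how policy overlays like those listed above could sit on top of a model decision. Every threshold, region code, and field name is a hypothetical placeholder. One design choice worth noting: in this sketch overlays can only make an outcome more conservative, never turn a decline into an approval.

```python
from dataclasses import dataclass

@dataclass
class Application:
    applicant_id: str
    monthly_income: float
    region: str
    loan_type: str
    amount: float
    is_pep: bool

# Hypothetical policy values; a real lender would set its own.
MIN_INCOME = 1500.0
RESTRICTED_REGIONS = {"REGION_X"}
ALLOWED_LOAN_TYPES = {"personal", "auto"}
MAX_AMOUNT = 20000.0

def apply_overlays(app, model_decision):
    """Apply business rules after scoring. Returns (final_decision, reasons)."""
    # Hard stops: reject regardless of the model's decision.
    hard_stops = []
    if app.region in RESTRICTED_REGIONS:
        hard_stops.append("restricted_region")
    if app.loan_type not in ALLOWED_LOAN_TYPES:
        hard_stops.append("loan_type_not_allowed")
    if app.amount > MAX_AMOUNT:
        hard_stops.append("exceeds_loan_cap")
    if hard_stops:
        return "reject", hard_stops

    # Soft flags: send would-be approvals to a human instead.
    review_flags = []
    if app.monthly_income < MIN_INCOME:
        review_flags.append("income_below_threshold")
    if app.is_pep:
        review_flags.append("pep_additional_checks")
    if review_flags and model_decision == "approve":
        return "manual_review", review_flags

    return model_decision, review_flags

# Example: the model approves, but the applicant is a PEP.
applicant = Application("A-17", 3200.0, "REGION_A", "personal", 5000.0, True)
decision, reasons = apply_overlays(applicant, "approve")
```

Returning the reasons alongside the decision keeps the overlay step as auditable as the score itself.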

AI and credit scoring at RiskSeal

Throughout this article, we’ve addressed common concerns about AI: whether it’s too complex, too opaque, or too risky for credit decisions.

At RiskSeal, we’ve built our system to answer these concerns head-on.

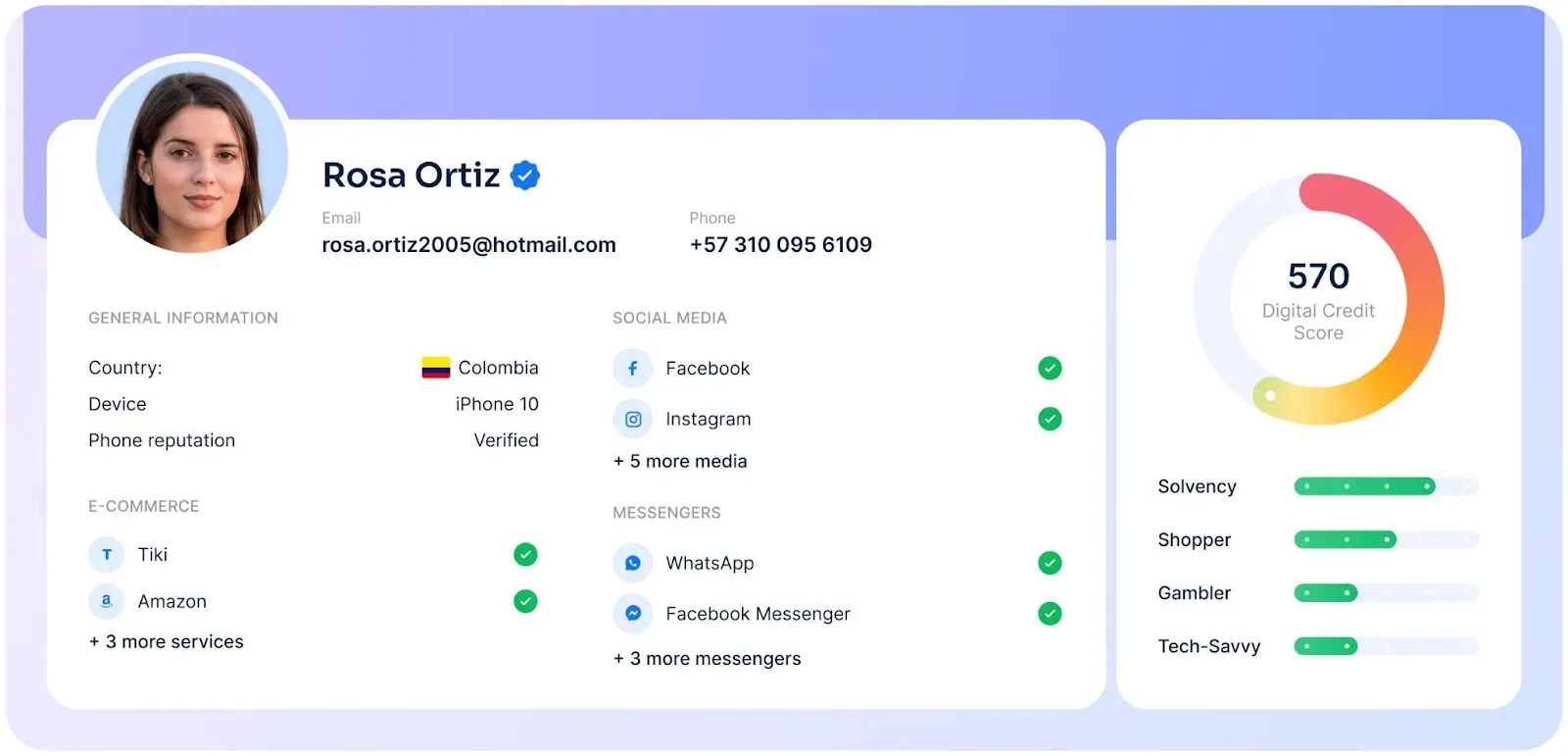

RiskSeal uses AI to enhance credit scoring through digital footprint analysis. The alternative data we provide enriches risk models with hundreds of real-time signals.

This leads to:

- More reliable credit scoring and risk ratings

- Faster decision-making and operational efficiency

- Early detection of fraud indicators at the application stage

- Accurate identification of behavioral anomalies

Speed and accuracy aren’t enough. Trust matters just as much. That’s why we use white-box AI models, fully explainable by design.

Every score generated by RiskSeal comes with a clear breakdown of the key data points and how they contributed to the final decision. There are no black-box shortcuts.
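To illustrate the general idea of a score breakdown in a white-box model, here is a toy logistic scorecard in which each input's contribution is simply its weight times its value. The feature names, weights, and intercept are invented for this example. It is a generic sketch of the technique, not RiskSeal's actual model or signals.

```python
import math

# Hypothetical frozen parameters for a toy white-box model.
INTERCEPT = -2.0
WEIGHTS = {
    "email_age_years": -0.30,   # older email address lowers risk
    "disposable_phone": 1.20,   # disposable number raises risk
    "missed_payments": 0.80,    # each missed payment raises risk
}

def score_with_breakdown(features):
    """Return (default probability, contributions ranked by absolute impact)."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked

# Hypothetical applicant.
probability, drivers = score_with_breakdown(
    {"email_age_years": 5, "disposable_phone": 1, "missed_payments": 0}
)
```

Because the contributions add up to the model's log-odds, a reviewer can see exactly which inputs pushed the score up or down and by how much.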

Our clients understand how each decision was made, and so can auditors or regulators.

This level of transparency also supports compliance from day one. Regulations increasingly demand that lenders explain automated credit decisions, especially when AI is involved.

RiskSeal makes this easy.

The alternative insights we provide are built to be auditable and aligned with responsible lending practices. For our partners, that means fewer compliance worries and a scoring system they can trust to grow with them.

Final thoughts on credit scoring using AI

Many misconceptions still surround AI in lending, but most come from misunderstanding, not reality.

At RiskSeal, we tackle these myths every day by building AI tools that are transparent, auditable, and built for real-world credit decisions.

If you're ready to cut through the noise and put AI to work for your risk team, we're here to help.

Download Your Free Resource

Get a practical, easy-to-use reference packed with insights you can apply right away.
