How to Assess Credit Scoring Models Enhanced with Alternative Data

You've enhanced your scorecards with alternative data. How can you check whether they work well, and which metrics should you measure? Read on to find out.

A survey by the credit bureau Nova Credit revealed that 59% of lenders incorporate alternative data into their credit scoring algorithms.

This practice enables them to lend to clients without a credit rating, thereby increasing consumer coverage by 23%.

In this article, we will discuss how to verify that alternative data actually improves a credit scoring model and how to evaluate the model's effectiveness.

Importance of assessing the effectiveness of credit scoring models

Assessing the effectiveness of credit scoring models is crucial for several reasons:

- Risk management. By accurately assessing the likelihood of default, lenders can manage their risk more effectively. An ineffective model could lead to higher default rates.

- Adaptation to changes. Regular assessment helps adapt models to changing conditions, such as the impact of a global financial crisis or a sudden economic downturn on borrowers' ability to repay loans.

- Innovation and competitive advantage. Lenders with well-tuned credit scoring models can gain a competitive edge by making more accurate lending decisions faster. This can lead to better customer satisfaction, lower costs, and higher profits.

The assessment of credit scoring models is fundamental to the integrity of the credit system. It supports financial stability, promotes access to loans, and ensures that the lending process is as efficient and unbiased as possible.

Criteria for assessing model improvements with alternative data

Not all alternative data provide lenders with equally high-quality information. They may vary in coverage, detail, specificity, relevance, and other characteristics.

Lenders should rely only on sources that deliver accurate, compliant data with a meaningful predictive signal. The criteria below can be used to assess alternative credit scoring models.

- Accuracy and predictive power. Assess how much alternative data improves the accuracy of the model's forecasts. FICO, a company that creates digital solutions for lending organizations, studied how accurately its credit scoring models predict outcomes. According to that research, lenders using the FICO® Score 10 T model can reduce default rates by 10% on credit cards, 9% on auto loans, and 17% on mortgages.

- Discriminatory power. Evaluate how much the alternative data helps distinguish creditworthy from non-creditworthy borrowers. Minimizing false positives and false negatives helps avoid both missed lending opportunities and loans to unreliable borrowers.

- Coverage. Monitor how alternative data expands the scope of analysis by reaching underserved or new customer segments. This is crucial because, according to World Bank data, 1.4 billion people worldwide are unbanked and therefore cannot access credit through traditional scoring models.

- Specificity. Use high-quality alternative data that allows for individual risk assessment, so that lenders can make more personalized credit decisions. For example, a customer with a high credit rating may get a higher credit limit and a lower interest rate.

- Orthogonality. Data from alternative sources should be unique and complement traditional data. In our previous article, we mentioned a FICO study that combined traditional and alternative data. Its results showed that supplementing traditional data with information from alternative sources allowed FICO to create a more powerful scoring model.

- Compliance. The use of alternative data must comply with regulatory standards and privacy laws. You should consider the requirements of the Fair Credit Reporting Act, Equal Credit Opportunity Act, and Gramm-Leach-Bliley Act.

- Location. Scoring models perform differently in various local markets. Therefore, it is necessary to assess alternative credit scoring models depending on the region where they will be applied.
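
Two of these criteria, coverage and orthogonality, are easy to put into numbers. The snippet below is a minimal sketch rather than a production check: the applicant records, the `bureau_score` and `alt_signal` field names, and all values are hypothetical. Using plain correlation as a stand-in for orthogonality is also an assumption, since low correlation only hints that a signal adds new information.

```python
from math import sqrt

# Hypothetical applicants: bureau_score is None when no credit file exists;
# alt_signal stands in for any alternative-data feature (illustrative values).
applicants = [
    {"bureau_score": 710, "alt_signal": 0.62},
    {"bureau_score": None, "alt_signal": 0.48},
    {"bureau_score": 640, "alt_signal": 0.81},
    {"bureau_score": None, "alt_signal": None},
    {"bureau_score": 580, "alt_signal": 0.35},
    {"bureau_score": 690, "alt_signal": 0.40},
]

def coverage(rows, *fields):
    """Share of applicants with a value in at least one of the given fields."""
    scorable = sum(1 for r in rows if any(r[f] is not None for f in fields))
    return scorable / len(rows)

def pearson(xs, ys):
    """Pearson correlation of two equally long numeric sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Coverage: how many applicants can be assessed with and without the new data.
traditional_coverage = coverage(applicants, "bureau_score")
combined_coverage = coverage(applicants, "bureau_score", "alt_signal")

# Orthogonality proxy: correlation on applicants that have both values.
both = [r for r in applicants
        if r["bureau_score"] is not None and r["alt_signal"] is not None]
corr = pearson([r["bureau_score"] for r in both],
               [r["alt_signal"] for r in both])

print(f"coverage: {traditional_coverage:.0%} -> {combined_coverage:.0%}")
print(f"correlation with bureau score: {corr:.2f}")
```

A correlation close to 1 or -1 would suggest the alternative signal largely repeats what the bureau score already says, while a value near zero leaves room for it to complement traditional data.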

Metrics important for credit scoring models evaluation

Many credit scoring metrics can be used to evaluate the effectiveness of a particular scorecard, and companies do not need to use all of them.

Which metrics to track depends on the strategy and goals of the lending organization. Below is a list of possible metrics used in the lending industry.

Back-testing and out-of-time testing to evaluate credit scoring model performance

There are two common tactics for evaluating a credit scoring model:

- Back-testing involves using historical data to check the model's credit scoring predictions against actual outcomes.

- Out-of-time testing evaluates the model's performance on a dataset from a different period than the one used to train the model.

Out-of-time testing helps evaluate the stability and reliability of the model over time, especially in the face of economic changes or shifts in customer behavior.

Both methods aim to validate the predictive power and stability of the credit scoring model using historical data.

By employing these tactics, organizations can enhance the reliability of their credit scoring models, make informed lending decisions, and better manage their credit risk.
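
To make the two tactics concrete, here is a minimal sketch of an out-of-time split followed by a simple back-test in each window. The loan records, the `pd` (predicted default probability) field, and the `CUTOFF` date are all hypothetical. Comparing the average predicted probability with the observed default rate is only one of several ways to check predictions against outcomes.

```python
from datetime import date

# Hypothetical scored loans: origination date, predicted default probability,
# and the observed outcome (1 = default). All values are illustrative.
loans = [
    {"originated": date(2022, 3, 1), "pd": 0.10, "defaulted": 0},
    {"originated": date(2022, 6, 15), "pd": 0.40, "defaulted": 1},
    {"originated": date(2022, 9, 10), "pd": 0.20, "defaulted": 0},
    {"originated": date(2022, 11, 20), "pd": 0.30, "defaulted": 0},
    {"originated": date(2023, 2, 5), "pd": 0.15, "defaulted": 0},
    {"originated": date(2023, 4, 18), "pd": 0.35, "defaulted": 1},
    {"originated": date(2023, 7, 30), "pd": 0.25, "defaulted": 1},
    {"originated": date(2023, 10, 12), "pd": 0.05, "defaulted": 0},
]

CUTOFF = date(2023, 1, 1)  # assumed boundary between the two periods

def out_of_time_split(rows, cutoff):
    """Split loans into the development window and a later, unseen window."""
    in_time = [r for r in rows if r["originated"] < cutoff]
    out_of_time = [r for r in rows if r["originated"] >= cutoff]
    return in_time, out_of_time

def backtest(rows):
    """Compare the average predicted default probability with the observed rate."""
    predicted = sum(r["pd"] for r in rows) / len(rows)
    observed = sum(r["defaulted"] for r in rows) / len(rows)
    return {"predicted": predicted, "observed": observed,
            "gap": observed - predicted}

in_time, out_of_time = out_of_time_split(loans, CUTOFF)
in_time_result = backtest(in_time)
out_of_time_result = backtest(out_of_time)

print("in-time:", in_time_result)
print("out-of-time:", out_of_time_result)
```

In this toy data the model looks well calibrated on the earlier window but underestimates defaults in the later one, which is the kind of drift out-of-time testing is meant to expose.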

Back-testing and out-of-time testing stages

- Historical data selection. Select a historical dataset that contains the input variables used by the credit scoring model and the actual lending outcomes, such as loan defaults or timely payments.

- Model application. Apply the credit scoring model to the historical dataset to predict lending outcomes.

- Performance evaluation. Evaluate the accuracy of the model's predictions by comparing the forecasted results with the actual outcomes. Common metrics used for evaluation include the Area Under the Curve (AUC) for the Receiver Operating Characteristic (ROC) curve, Gini coefficient, accuracy, precision, recall, and F1-score.

- Adjustments and optimization. Analyze the results of the back-testing or out-of-time testing, and if necessary, make adjustments to the credit scoring model. This may involve recalibrating the model, selecting different variables, or changing the model's parameters.
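
The metrics named in the performance evaluation stage can be computed in a few lines. The sketch below uses made-up scores and outcomes and treats a default as the positive class, so a false positive is a good borrower flagged as risky. The 0.5 threshold is an arbitrary assumption; a real cut-off would come from the lender's risk appetite.

```python
def auc(scores, labels):
    """Chance that a random defaulter outscores a random non-defaulter.

    Ties count as half a win. This pairwise form is fine for small samples.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def classification_metrics(scores, labels, threshold):
    """Confusion-matrix metrics for a given score cut-off (1 = default)."""
    predicted = [1 if s >= threshold else 0 for s in scores]
    pairs = list(zip(predicted, labels))
    tp = sum(1 for p, y in pairs if p == 1 and y == 1)
    fp = sum(1 for p, y in pairs if p == 1 and y == 0)
    fn = sum(1 for p, y in pairs if p == 0 and y == 1)
    tn = sum(1 for p, y in pairs if p == 0 and y == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(labels),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "false_positives": fp,
        "false_negatives": fn,
    }

# Illustrative predicted default scores and actual outcomes (1 = default).
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
labels = [1, 1, 0, 1, 0, 0, 1, 0]

model_auc = auc(scores, labels)
gini = 2 * model_auc - 1  # Gini coefficient derived from AUC
metrics = classification_metrics(scores, labels, threshold=0.5)

print(f"AUC={model_auc:.2f}, Gini={gini:.2f}")
print(metrics)
```

Running the same functions on the model before and after adding alternative data, on the same applicants, gives a like-for-like view of whether the enrichment actually helped.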

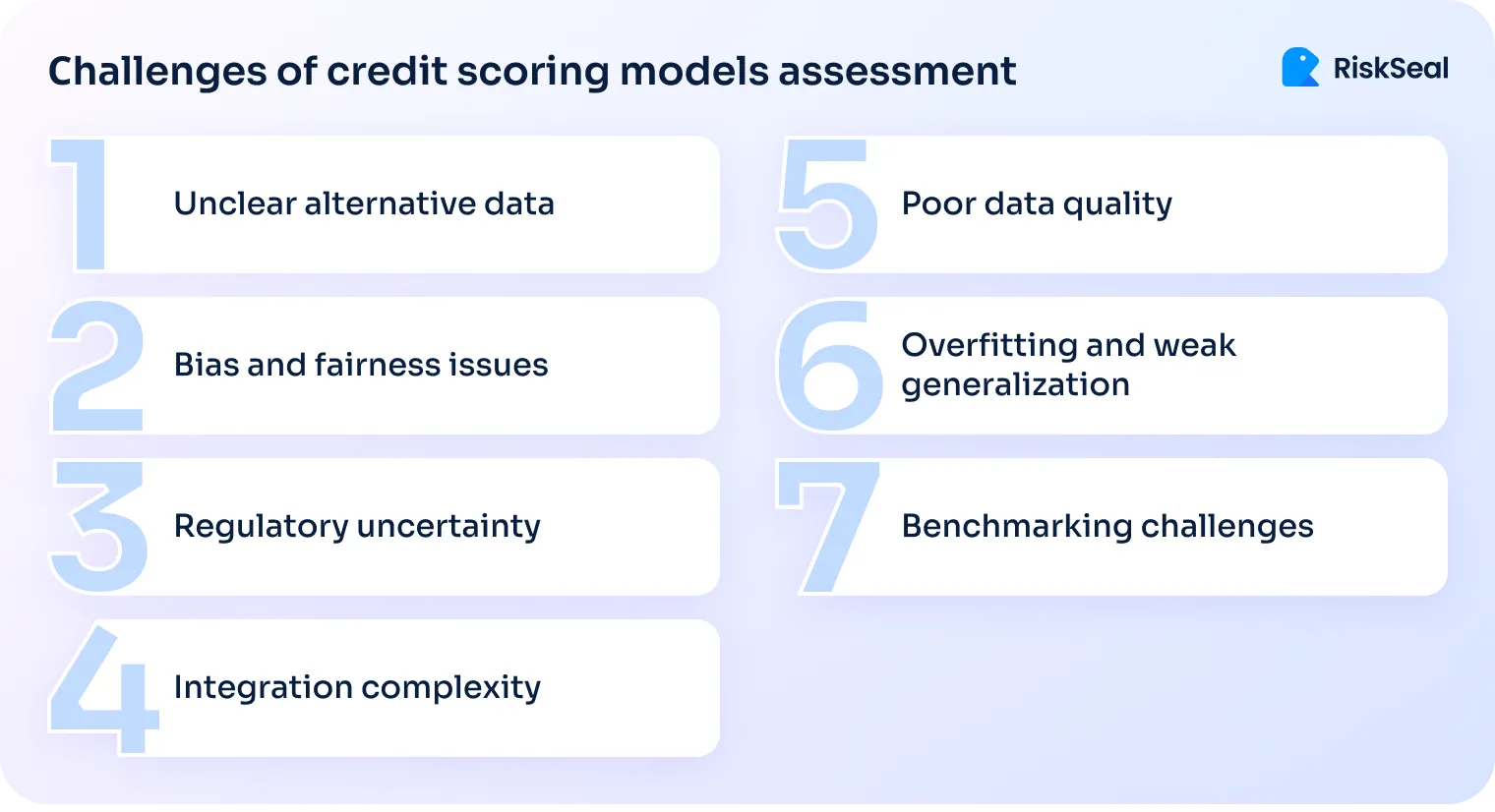

Top 7 challenges of credit scoring models assessment

Assessing a credit scoring model can be quite difficult.

Lenders face a number of challenges when they bring alternative data into their processes.

#1. Unclear nature of alternative data sources

Alternative data comes in many shapes and sizes. And that’s part of the problem.

Lack of standardization. These data sources vary significantly in structure, quality, and reliability.

For instance, utility payment records and social media activity follow entirely different logic and formats.

Interpretation varies. The same dataset can be understood differently across lenders, making consistent use a challenge.

Integration hurdles. Different update cycles, formats, and delivery mechanisms (from fintech apps to telecom and e-commerce platforms) complicate efforts to unify alternative data within decision models.

#2. Data quality and reliability issues

Missing or incomplete data. Not all potential borrowers have a digital footprint.

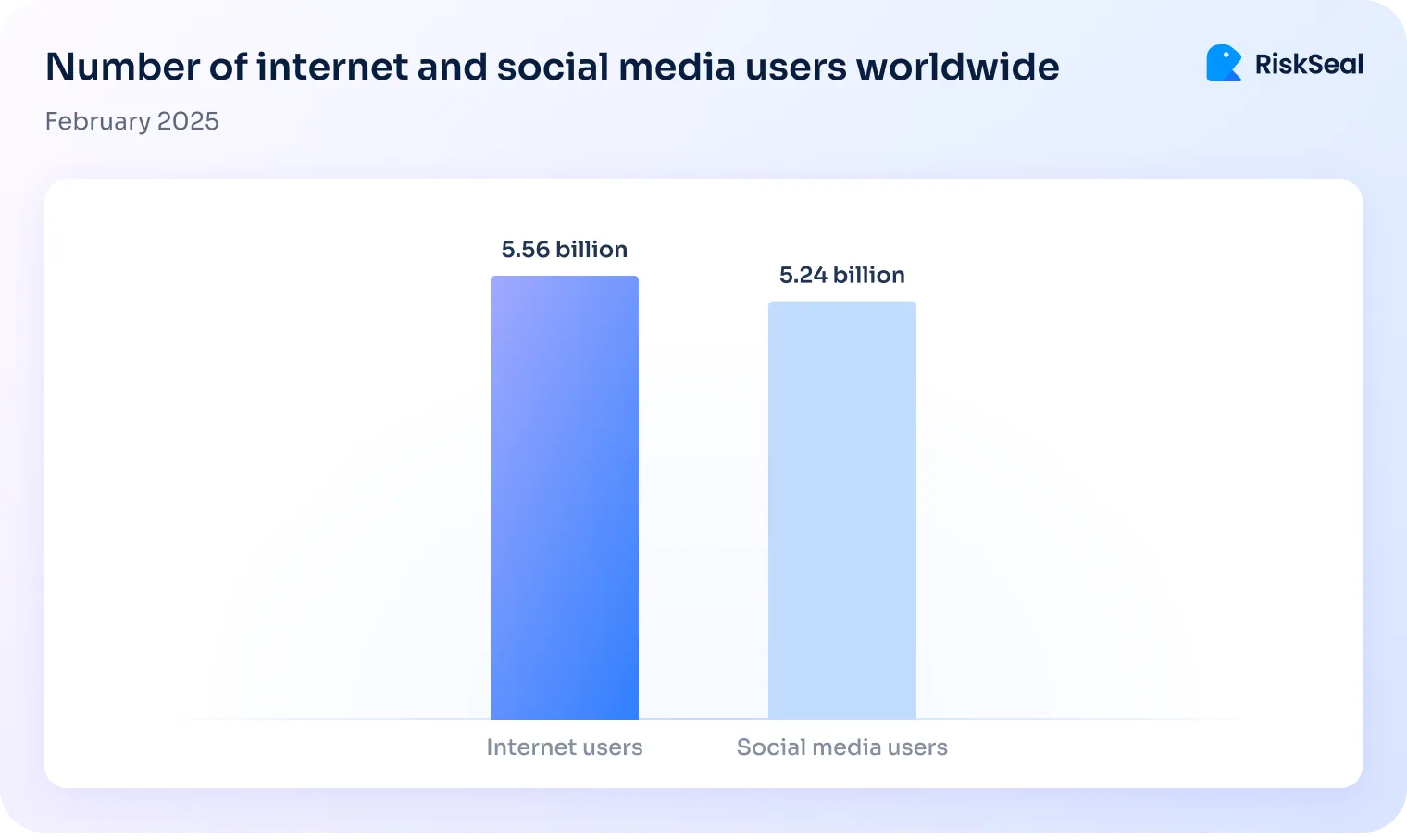

According to Statista, about 68% of the world’s population has Internet access. Even fewer, around 64%, use social networks.

On the one hand, this is a considerable number.

On the other hand, there remain population categories for whom assessment using alternative data is not sufficiently reliable.

Relevance is not guaranteed. Many alternative data sources capture behavioral patterns that may have little or no correlation with actual credit risk.

Without a clear linkage, these signals can introduce noise rather than insight.

Data changes quickly. Behavioral data changes fast, which can make credit models less stable and less accurate.

#3. Bias, fairness, and ethical concerns

Hidden bias. Some types of alternative data can unintentionally disadvantage certain groups.

For example, people from low-income areas might have less access to digital services, which could unfairly affect their scores.

Proxy discrimination. Even data that seems neutral, like the kind of phone someone uses or which apps they’ve installed, can act as stand-ins for things like income, gender, or ethnicity.

That creates a risk of treating applicants unfairly without the lender realizing it.

Hard to explain. If a model lowers someone’s score, can you clearly say why?

With complex or opaque data, that answer isn’t always easy, which makes things tricky for both lenders and regulators.

#4. Model overfitting and generalization limitations

Too specific to be useful. With so much alternative data available, models can pick up on patterns that only apply to a small group.

For example, a model might learn that frequent ride-sharing app usage predicts good repayment, but that may not hold for everyone.

Struggles in new markets. A model trained on one group of customers may not perform well in a different region or demographic. What works in an urban U.S. market, for instance, might fail in rural India.

#5. Regulatory and compliance ambiguities

Lack of clear guidelines. Not all countries have clear regulations on the use of alternative data. This leads to legal uncertainty.

Different rules everywhere. Privacy and consent laws vary widely. For example, what’s acceptable in Brazil may not be permitted in the EU, especially when it comes to collecting and using personal data.

Hard to audit. If a credit model is a black box, it’s tough to explain how decisions were made. That’s a problem when regulators or auditors come asking for answers.

#6. Difficulty in benchmarking and evaluation

No common KPIs. Unlike traditional credit scoring, there’s no industry-wide standard for judging the performance of models built on alternative data.

Everyone measures success a little differently.

Moving targets. Alternative data, like app usage or online behavior, changes quickly. That makes it hard to build stable benchmarks or use static testing methods.

Too little history. Many of these data types are new. Without years of past data, it’s difficult to test how well a model would have worked in different market conditions.
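
One way to keep an eye on such moving targets is to track how far the score distribution has drifted from a baseline. The sketch below uses the Population Stability Index (PSI), a drift measure widely used in credit risk. The article itself does not prescribe PSI, so treat it as one possible choice. The band counts are hypothetical, and any alert threshold would need to be set by the lender.

```python
from math import log

def psi(baseline_counts, recent_counts, floor=1e-6):
    """Population Stability Index between two binned score distributions.

    Both lists hold applicant counts per score band, in the same band order.
    The floor avoids division by zero or log(0) for empty bands.
    """
    base_total = sum(baseline_counts)
    recent_total = sum(recent_counts)
    total = 0.0
    for base, recent in zip(baseline_counts, recent_counts):
        base_share = max(base / base_total, floor)
        recent_share = max(recent / recent_total, floor)
        total += (recent_share - base_share) * log(recent_share / base_share)
    return total

# Hypothetical applicant counts per score band, lowest to highest band.
baseline = [100, 200, 400, 200, 100]   # population the model was built on
recent = [60, 150, 380, 250, 160]      # latest scoring period

drift = psi(baseline, recent)
print(f"PSI = {drift:.3f}")
```

A PSI of zero means the two distributions match exactly, and larger values mean a bigger shift. Computing it on a regular schedule gives a simple, repeatable benchmark even when the underlying behavioral data keeps changing.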

#7. Integration and operational complexity

Heavy lifting required. Bringing in real-time or unstructured data, like text or mobile signals, often needs custom infrastructure and advanced data pipelines.

More teams, more complexity. Making alternative data work means close collaboration between data science, compliance, and business teams. That’s not always easy to coordinate.

Data delays. Not all alternative data is real-time. If updates lag, credit decisions can be based on outdated information, reducing their reliability.

Case study. Boosting credit model accuracy with RiskSeal’s data

AvaFin, a fintech firm, delivers lending products in Mexico. By offering credit to unbanked people, they’re reaching new customers, unlocking growth, and making finance more inclusive.

By working with RiskSeal, the client was able to access valuable data about potential borrowers using advanced digital and identity verification tools. These included:

- Digital footprint analysis across social media, messaging apps, and other online platforms.

- Email data, such as mailbox age and activity level.

- Phone number checks, including detection of disposable or virtual numbers.

- Identity verification with Face Match, Name Match, and location data.

Their next step was to evaluate the performance of the updated scoring models by tracking key metrics:

- Credit score

- Trust score

- Default rates

- Data coverage

- Verified clients

- Loan volume

How RiskSeal improves credit scoring models

RiskSeal has extensive expertise in improving credit scoring models. To assist in this matter, we provide digital lenders with:

- Data enrichment. The lenders receive unique information that they cannot get from traditional sources. By choosing RiskSeal, you can expect to receive a vast amount of data – over 400 digital signals.

- Digital footprints. We analyze borrowers' activity across more than 200 social networks and online platforms. We also verify information regarding your potential clients' subscription statuses.

The screenshot below displays the actual RiskSeal indicators. We acquired them by analyzing the performance of a client's scoring model after enriching it with alternative data.

The same applies to a digital credit score: the higher the credit score is, the lower the likelihood of default.

Download Your Free Resource

Get a practical, easy-to-use reference packed with insights you can apply right away.

FAQ

How does RiskSeal increase the predictive power of credit scoring models?

RiskSeal gathers extensive arrays of alternative data from reliable sources to increase the predictive power of credit scoring models. This includes information from 140+ social networks and platforms.

Clients receive over 300 digital signals.

Why is assessing the effectiveness of credit scoring models important?

Assessing the effectiveness of credit scoring models is important because it helps manage risks, adapt models to changes, access innovations, and gain competitive advantages.

In other words, it supports the credit organization's financial stability and ensures the lending process's efficiency and impartiality.

What metrics are crucial for evaluating credit scoring models, especially with alternative data?

There are numerous credit score metrics crucial for evaluating alternative credit scoring models. Each company selects those that best align with its strategy and goals. Among them are false positives, false negatives, true positives, true negatives, default rate, etc.

How do false positives and false negatives impact the effectiveness of credit scorecards?

False positives and false negatives negatively impact the effectiveness of credit scorecards.

In the first case, the model incorrectly predicts default, leading to missed profitable lending opportunities. In the second case, the model fails to identify the real risk of default, potentially resulting in loans granted to non-creditworthy borrowers.

How do back-testing and out-of-time testing improve credit scoring model performance?

Back-testing checks how closely the credit scoring model's forecasts match actual results. Out-of-time testing assesses the model's performance on a dataset from a different period than the one used for model training.

Based on the results obtained, informed adjustments can be made to the credit scoring model.

What role do digital footprints play in improving credit scorecards?

Digital footprints provide additional data for assessing the creditworthiness of potential borrowers. They have broader consumer coverage than traditional data, thus allowing lending to clients without a credit history.

Is alternative data suitable for small lenders or fintech startups?

Yes. Moreover, this approach is particularly advantageous for fintech startups and small credit institutions. It facilitates lending to unbanked populations, reduces default rates, and strengthens fraud prevention efforts.
